MAK ONE Use Cases

See the types of simulation systems and components that our customers build with MAK ONE. For each, we identify where the MAK ONE products benefit the design. Explore the possibilities!

Collective Training in MAK ONE

Collective training involves groups of soldiers (crews, teams, squads, platoons, companies, and larger units) learning, practicing, and demonstrating proficiency for tactical missions. It creates an environment where the actions of one participant impact the training experience and environment of the others. Such training would be a useful exercise, for instance, for a helicopter pilot and convoy drivers who need to synchronize their efforts for a resupply mission.

Collective training expands and enhances the scope of training environments, pushing beyond individual skills training to develop cohesive, synchronized teams, leading to warfighters who better understand their role and contribution to the mission.

The MAK Advantage for Collective Training

The MAK ONE suite of simulation software provides all the tools to create a simulated collective training environment. While many customers choose to work solely from the MAK ONE platform, other customers use only the parts of the MAK ONE suite required to work with their existing software and training devices.

Here’s a look at how you can use MAK ONE for your collective training exercises:

- Play the part: With VR-Engage, trainees play the role of a vehicle driver, gunner, or commander, the pilot of an airplane or helicopter, or a sensor operator. Connect separate VR-Engage driver, gunner, and commander stations to match the structure and responsibilities of a vehicle’s crew.

- Coordinate the exercise: While trainees participate in the exercise using VR-Engage role player stations, the instructor or operator uses the VR-Forces GUI to play a higher-level role like Battle Manager, inject MSEL (Master Scenario Event List) events to stimulate trainee responses, or conduct the entire exercise for the trainees.

- Populate the environment: Use the powerful VR-Forces computer generated forces (CGF) application to simulate the non-player entities and events in training scenarios – including weapons, sensors, and AI behaviors. Add pattern-of-life activity to make the environment more realistic – including pedestrian and civilian vehicle, air, and shipping traffic; and simulate dynamic terrain changes – including craters, ditches, and berms.

- Emulate tactical missions: Simulate complex tactical scenarios with VR-Forces by adding intelligent CGF-controlled adjacent friendly and opposing forces. Trainees can coordinate with friendly units and must adapt to dynamic enemy behaviors, enhancing their decision-making and mission execution skills.

- Generate the terrain: The MAK Earth terrain engine, which is embedded into both VR-Engage and VR-Forces, imports and procedurally generates rich, high-fidelity terrain content that is suitable for ground simulation and correlated across the entire system. VR-TheWorld tiles and serves raster and vector source data to all applications.

- Network systems together: MAK's infrastructure tools have been connecting systems together for more than 30 years. With VR-Link and the MAK RTI, you can add third-party applications into a DIS/HLA federation for your collective training exercise. Use CIGI to drive our VR-Vantage image generator from your custom vehicle simulation host or drive your own IG from MAK’s VR-Engage player station*.
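To make the DIS side of such a federation more concrete, here is a minimal Python sketch that packs and unpacks the 12-byte PDU header defined by the DIS standard (IEEE 1278.1). It is not the VR-Link API, which handles this encoding for you; the function names `pack_header` and `unpack_header` are hypothetical and exist only for illustration.

```python
import struct

# DIS PDU header (IEEE 1278.1), 12 bytes in network byte order:
# protocol version, exercise ID, PDU type, protocol family (1 byte each),
# timestamp (4 bytes), PDU length in bytes (2 bytes), padding (2 bytes).
PDU_HEADER = struct.Struct(">BBBBIHH")

ENTITY_STATE_PDU = 1    # PDU type: Entity State
ENTITY_INFO_FAMILY = 1  # protocol family: Entity Information/Interaction


def pack_header(exercise_id, pdu_type, family, timestamp, length, version=6):
    """Illustrative helper (not a MAK API): build a DIS PDU header."""
    return PDU_HEADER.pack(version, exercise_id, pdu_type, family,
                           timestamp, length, 0)


def unpack_header(data):
    """Illustrative helper (not a MAK API): decode the first 12 bytes of a PDU."""
    version, exercise_id, pdu_type, family, timestamp, length, _padding = (
        PDU_HEADER.unpack(data[:PDU_HEADER.size])
    )
    return {
        "version": version,
        "exercise_id": exercise_id,
        "pdu_type": pdu_type,
        "family": family,
        "timestamp": timestamp,
        "length": length,
    }


# An Entity State PDU with no articulation parameters is 144 bytes long.
header = pack_header(exercise_id=1, pdu_type=ENTITY_STATE_PDU,
                     family=ENTITY_INFO_FAMILY, timestamp=0, length=144)
decoded = unpack_header(header)
```

Any application that agrees on this wire format can exchange entity updates with the rest of the exercise, which is what lets third-party simulators join alongside the MAK tools.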

Fight the Mission with MAK

Used separately or together, MAK ONE tools have all the capabilities you need to play multi-crew collective training missions: Control a commander’s independent turret or perform a commander’s override of the gunner’s sight; switch between vision blocks, camera views, and IR / night vision; integrate with Command and Control systems for slew-to-cue commands; or execute hunter-killer operations with multiple coordinated crews.

Reach out to us at

*coming soon!

Sensor Operator Training

Sensor Operator Training with MAK ONE

Sensor operators and aircraft pilots provide critical intelligence and support during complex, sometimes dangerous missions. To qualify, they must have in-depth control of the ground control station sensor system and the tactical prowess required to work as a team during missions with unpredictable complications.

To train, MAK offers flexible and powerful simulation products designed to address the range of Sensor-Operator and Pilot training requirements. Here are a few examples:

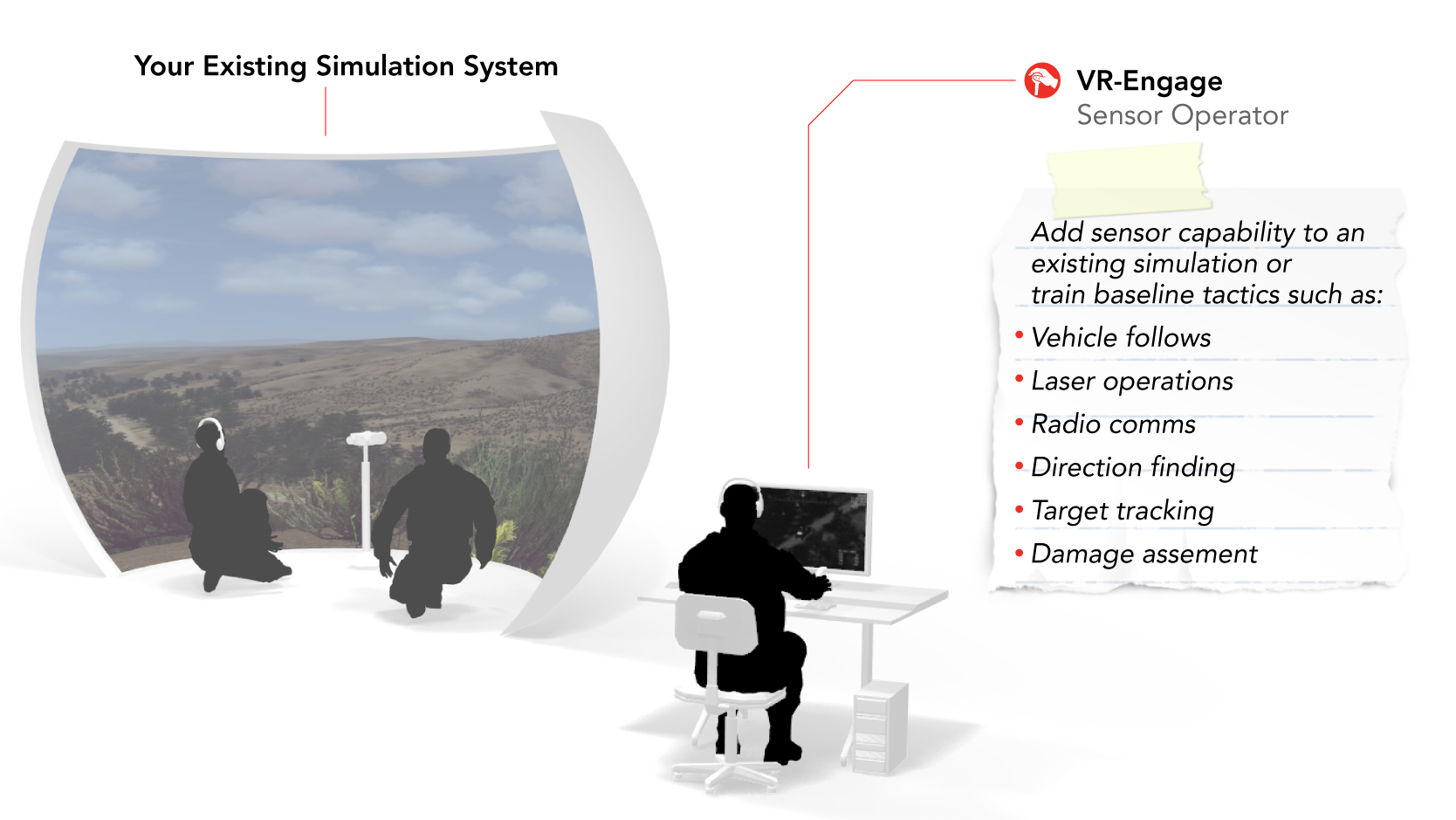

Use Case #1, Add ISR sensors to an existing training system:

Whether you want to add an airborne sensor asset to an existing exercise or to host a classroom full of beginner sensor operators, VR-Engage Sensor Operator offers a quick and easy way to integrate with simulation systems right out of the box, as shown in Figure 1. Experienced participants can use it to provide intel from their payload vantage point, and beginners can gain baseline training.

Figure 1: A Sensor Operator adds another view point to a JTAC system

All MAK products are terrain- and protocol-agile, allowing you to leverage your existing capabilities while attaching a gimbaled sensor to any DIS or HLA entity in your existing simulation system.

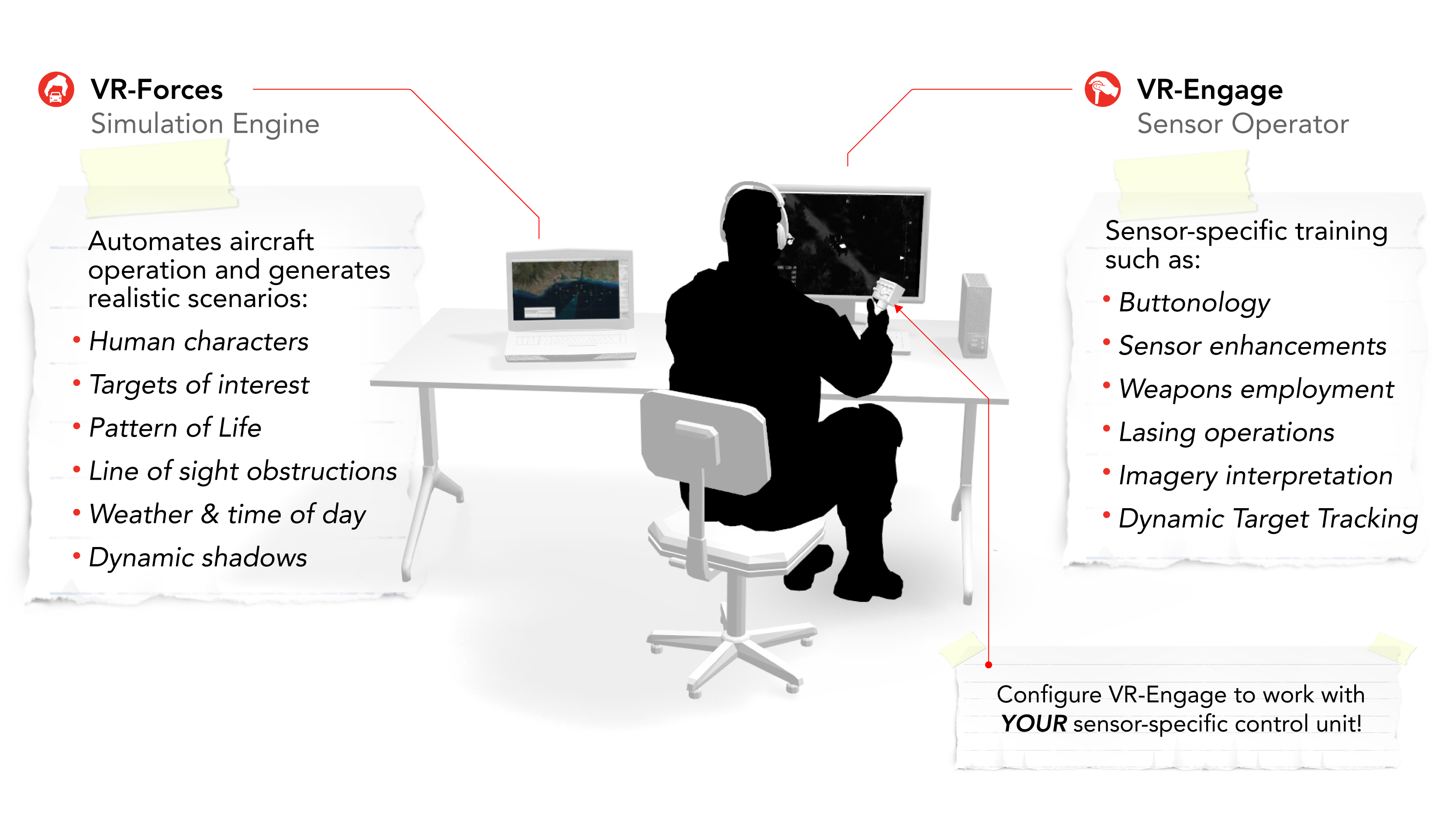

Use Case #2, Train in-depth system operation

Before operating a sensor system in the real world, operators need training for in-depth sensor system operation.

This training is done with the combination of VR-Forces as the simulation engine and VR-Engage as the Sensor Operator role player station. VR-Forces is used to design scenarios that require the learning of essential skills in controlling the sensor gimbal. It provides a way to assign real-world Patterns Of Life and add specific behavioral patterns to human characters or crowds. Fill the world with intelligent, tactically significant characters (bad guys, civilians, and military personnel) to search for or track. Create targets, threats, triggers, and events. VR-Forces also provides the computer-assisted flight control for the sensor operator’s aircraft.

VR-Engage can be configured to use custom controllers and menu structures to mimic buttonology and emulate the physical gimbal controls. Adding SensorFX to VR-Engage further enhances fidelity by emulating the physics-based visuals so that they provide the same effects, enhancements, and optimizations as the actual sensor. In short, students can train on a replica of their system configuration.

Figure 2: Training to operate a particular sensor device

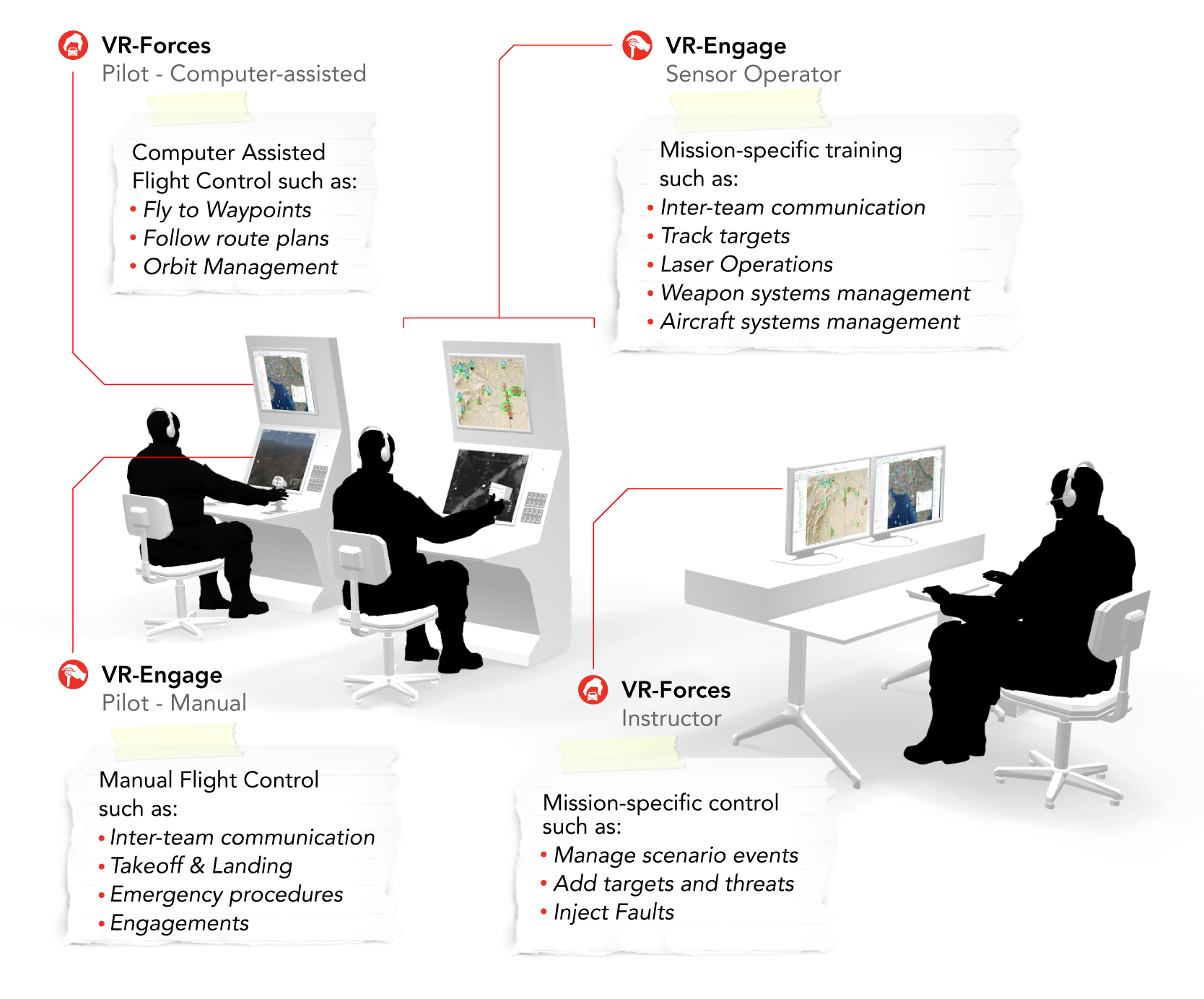

Use Case #3, Train the full airborne mission team

Before integrating with a larger mission, the Sensor and platform operators must learn to operate tactically as a Remotely Piloted Aircraft unit.

These skills can be acquired while training side by side on a full-mission trainer. The stations in Figure 3 use combinations of VR-Forces and VR-Engage to fulfill the roles of the Instructor, the Pilot of the aircraft, and the Sensor Operator.

Pilot:

In the Pilot station, VR-Forces provides the Computer-Assisted flight control of the UAV.

Through the VR-Forces GUI’s 2D map interface, a user can task a UAV to fly to a specific waypoint or location, follow a route, fly a desired altitude or heading, orbit a point, and even point the sensor at a particular target or location (sometimes the pilot, rather than the Sensor Operator, will want to temporarily control the sensor). A user can also create waypoints, routes, and other control objects during flight. In addition, the VR-Forces GUI can show the footprint and frustum of the sensor to enhance situational awareness (in 2D and 3D).
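As a rough illustration of what a sensor footprint represents, the sketch below computes where the near edge, boresight, and far edge of a downward-looking sensor's field of view meet flat ground. It is a simplified geometric model under stated assumptions (flat terrain, no roll, vertical field of view only) and is not how VR-Forces computes its display; the function name `ground_footprint` is hypothetical.

```python
import math


def ground_footprint(altitude_m, depression_deg, vfov_deg):
    """Return (near, center, far) ground ranges in metres, measured
    horizontally from the point directly below the sensor.

    Assumes flat ground. depression_deg is the boresight angle below
    the horizon; vfov_deg is the full vertical field of view.
    """
    half_fov = vfov_deg / 2.0
    steep = depression_deg + half_fov    # lower edge of the frustum
    shallow = depression_deg - half_fov  # upper edge of the frustum
    if altitude_m <= 0 or shallow <= 0 or steep >= 90:
        raise ValueError("frustum must point below the horizon and ahead of nadir")
    near = altitude_m / math.tan(math.radians(steep))
    center = altitude_m / math.tan(math.radians(depression_deg))
    far = altitude_m / math.tan(math.radians(shallow))
    return near, center, far


# A UAV at 1000 m looking 45 degrees down with a 10 degree vertical field of view.
near, center, far = ground_footprint(1000.0, 45.0, 10.0)
```

In this example the footprint spans roughly 839 m to 1192 m ahead of the aircraft, and it stretches quickly as the sensor looks closer to the horizon, which is one reason a footprint overlay helps with situational awareness.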

VR-Engage provides manual control of the aircraft, including realistic aircraft dynamics and response to the environmental conditions. In this role, the Pilot can choose to see what the sensor sees, even share control with the Sensor Operator.

Sensor Operator:

VR-Engage provides the role of the Sensor Operator, letting the user manually control the gimbaled sensor on the platform. In this role, the Sensor Operator gains the required set of advanced skills and tactical training to become an integral part of the mission. They learn to acquire and track targets and to prioritize mission-related warnings, updates, and radio communications.

Instructor Station:

This is where the scenario design gets creative; the instructor can use VR-Forces to inject complexities into the scenario by using its advanced AI to create tactically significant behaviors in human characters or crowds. Tweak the clouds and fog, or produce rain, to change visibility. Increase the wind, change its direction, and even jam communications during runtime.

As students advance through full mission training, they learn to support their crewmen in complex missions. They share salient information with each other, operate radios, and communicate with ground teams, rear-area commanders, and other entities covering the target area.

Figure 3: Apply knowledge of systems, weapons and tactics to complete missions together

MAK products are well suited to Sensor Operator training. VR-Engage’s Sensor Operator role is ready to use and connect to existing training simulations, it can be configured and customized to emulate specific sensor controllers, and it can interoperate with the full capabilities of VR-Forces to form full mission trainers.

MAK products can be used for live, virtual and constructive training.

Reach out to us at

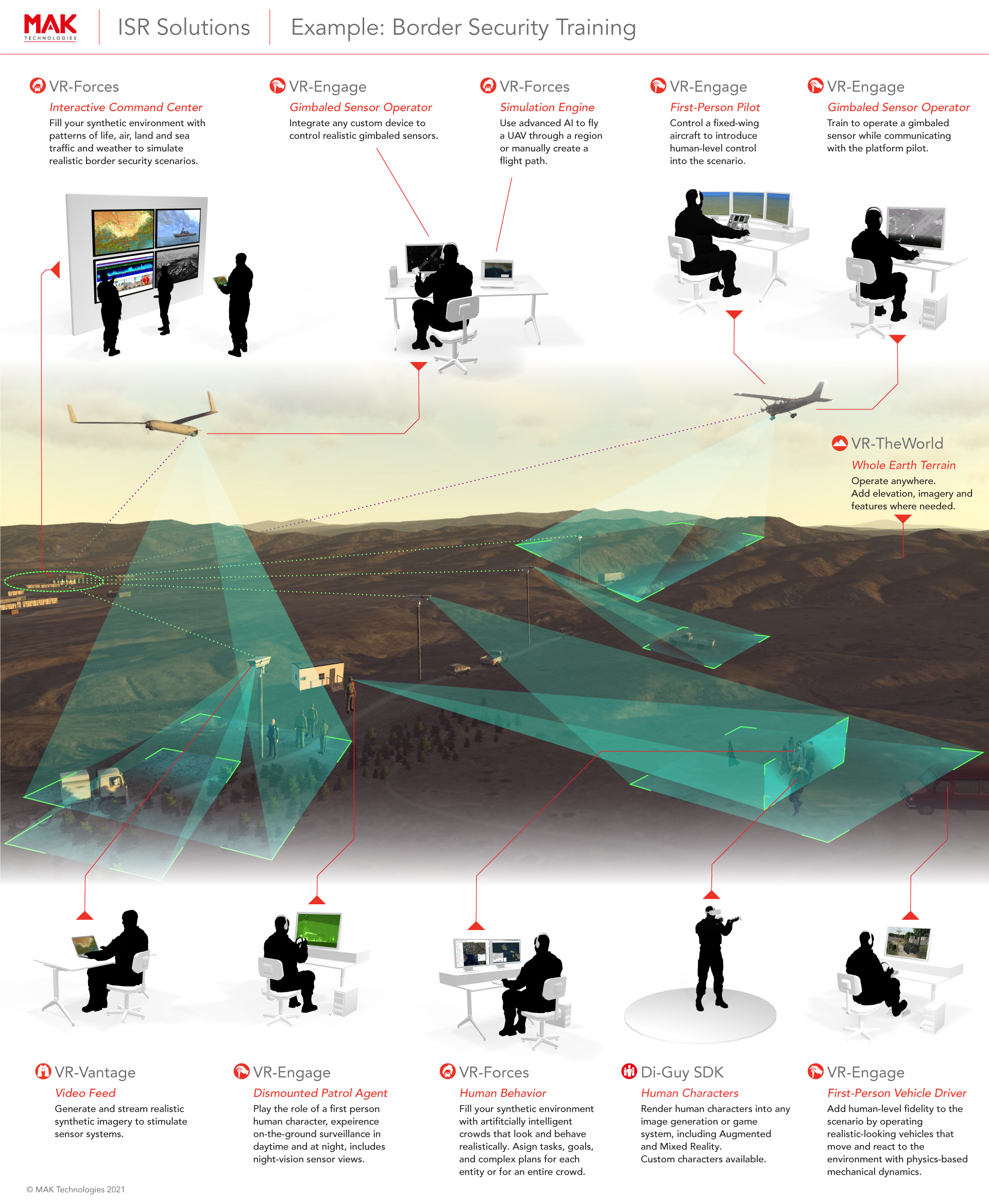

Intelligence, Surveillance, Reconnaissance Systems

MAK ONE for Intelligence, Surveillance, and Reconnaissance Systems

In this infographic, we illustrate how MAK ONE contributes to a simulation that models ISR-specific border security scenarios.

MAK’s ability to model ISR systems comes in many forms:

- VR-Engage Sensor Operator role can be used to control a gimbaled sensor while viewing the sensor imagery feed.

- The vehicle/platform carrying the sensor pod can be flown interactively using the first-person VR-Engage Pilot role.

- VR-Forces computer generated forces AI can fly aircraft along planned routes that also respond to simulation events.

- Sensors can be fixed at a location such as on a building or observation tower.

- VR-Vantage renders sensor video and streams it to real (non-simulated) systems like unmanned vehicle ground stations or image processing and analysis tools.

- At a more abstract level, VR-Forces can model scenarios where entities detect one another and send spot reports to intelligence systems.
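The abstract detection-and-reporting case in the last item can be pictured with a small Python sketch. It assumes a deliberately simple rule (an observer detects any opposing entity within a fixed sensor range on a flat plane) and a made-up report format; the `Entity` class and `spot_reports` function are hypothetical and do not reflect VR-Forces' actual detection models or message formats.

```python
import math
from dataclasses import dataclass


@dataclass
class Entity:
    name: str
    side: str                    # e.g. "blue" or "red"
    x_m: float
    y_m: float
    sensor_range_m: float = 0.0  # 0 means the entity carries no sensor


def spot_reports(entities, time_s):
    """Return one report per (observer, opposing target) pair in range."""
    reports = []
    for observer in entities:
        for target in entities:
            if target is observer or target.side == observer.side:
                continue
            distance = math.hypot(target.x_m - observer.x_m,
                                  target.y_m - observer.y_m)
            if distance <= observer.sensor_range_m:
                reports.append({
                    "time_s": time_s,
                    "observer": observer.name,
                    "target": target.name,
                    "location": (target.x_m, target.y_m),
                    "range_m": distance,
                })
    return reports


scenario = [
    Entity("uav_1", "blue", 0.0, 0.0, sensor_range_m=5000.0),
    Entity("truck_1", "red", 3000.0, 4000.0),
    Entity("tank_1", "red", 6000.0, 0.0),
]
reports = spot_reports(scenario, time_s=120.0)
```

Here only `truck_1` falls within the UAV's range, so a single report is produced; an intelligence system on the receiving end would see that report rather than the underlying ground truth.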

All of these configurations benefit from MAK’s full suite of product capabilities.

- VR-Forces models scenarios involving vehicles, weapons, and human characters.

- VR-TheWorld Server provides geographic data to create the terrain databases within VR-Forces, VR-Vantage, and VR-Engage.

- DI-Guy SDK provides human character visualization to integrate into many commercial visual systems.

- MAK interoperability tools (VR-Link, MAK RTI, VR-Exchange) provide network connectivity between all the components of the ISR system.

Command & Staff Training

MAK CST: The Low Overhead Approach to Command and Staff Training

Whether you’re conducting an exercise, providing academic instruction, or managing a local crisis, simulation plays an important role in command and staff training. Simulation models the situation to provide learning opportunities for the trainees and to simulate the command and control (C2), or Mission Command systems, they use. Simulation helps trainees and instructors plan the battle or crisis, conduct the exercise, and review the outcome.

CST Solutions

MAK’s training systems have been developed in close cooperation with the US Army, Marines, and Air Force and deployed to governments across the globe.

Air Support Operations Center (ASOC) Total Mission Training - USAF/ UKRAF

What's At Stake?

The ASOC (Air Support Operations Center) Battlefield Simulation fills a crucial gap in USAF and United Kingdom Close Air Support (CAS) and airspace manager training. The system provides six squadrons with the capability to conduct total-mission training events whenever the personnel and time are available.

How MAK Helped

When the 111th ASOS returned from their first deployment to Afghanistan, they realized the training available prior to deployment was inadequate. They sought an organic training capability focused on the ASOC mission that was low cost, simple to use, adaptable, and available now. With the assistance of MAK, they developed a complete training system based on MAK’s QuickStrike HLA-based simulation. Through more than two years of spiral development, incorporating lessons learned, the system has matured, and can now realistically replicate the Tactical Operations Center (TOC) in Kabul, Afghanistan, the TOC supporting the mission in Iraq, or can expand to support a major conflict scenario.

The training system provides a collaborative workspace for the training audience and exercise control group via integrated software and workstations that can easily adapt to new mission requirements and TOC configurations. The system continues to mature. Based on inputs from the warfighter, new capabilities have been incorporated to add realism and simplify the scenario development process. The QuickStrike simulation can now import TBMCS Air Tasking Order air mission data and can provide air and ground tracks to a common operating picture, presented through either TACP CASS or JADOCS.

Tactical Operations Training – USMC MCTOG

What's At Stake?

The U.S. Marine Corps Tactical Operations Group (MCTOG) uses an advanced human simulation, ECO Sim, to model blue forces, opposing forces, and civilian pattern-of-life to train captains two weeks prior to deployment in Afghanistan. ECO Sim trains IED defeat missions by simulating sophisticated human networks of financiers, bomb makers, safe houses, leaders, and emplacers. These IED networks operate within a larger backdrop of ambient civilian behavior: farmers in fields, children attending school, families going to marketplaces and religious services. The Marine captain commands searches, patrols, and detentions, all while monitoring the battlefield using ISR data provided by UAS and stationary cameras. In addition, ECO Sim has a sophisticated report capability, mimicking the way Marines will actually convey and receive information in the battlefield.

MAK CST simulates a full spectrum of operations at all levels – from squad leader through brigade commander. Whether you’re training for regular or irregular warfare, border and force protection, or civil emergency response, MAK CST provides realistic scenarios to challenge the trainees so they can hone their decision-making and communication skills.

The Right Tool for the Job

Commanders and staff officers in the field use MAK CST to practice and teach decision-making skills and processes. It works in live, constructive, or virtual simulation environments and scales from a single computer to distributed networked simulation systems with sites across the world. MAK CST can function in a stand-alone mode for training against computer generated enemies, while multi-player mode supports collaborative battlefield environments with multiple commanders and staff officers. It also allows multiple students in a classroom to practice individually under instructor guidance or on their own time.

The Cost-Effective Solution

MAK CST accurately replicates the operational decision-making environment to create a realistic, yet easy to use “experiential learning” environment in the most cost-effective way. MAK CST allows you to train for your command and control tactics, techniques, and procedures. It can provide a mock-up of a command center with simulated C2 equipment stimulated with Intel and surveillance from a contextually rich force-on-force simulation environment. It allows you to create scenarios that challenge and inform, that provide mission commanders with decision-making opportunities. It provides an environment where the entire battle staff can learn to visualize the battle space and make tactical decisions in a time-constrained and information-rich environment.

Stimulating C2 Systems

Tools and talent for developing a CST capability or integrating CST into a command & control system

MAK has a long history of working with system integrators to build command and staff training systems. We can help design systems for training commanders and staff at the brigade, battalion, and company levels using aggregate-level simulation, entity-level simulation, and individual human character simulation. Our technology and support services enable you to deliver effective training solutions for your customers.

CST Stimulation Solutions

Have a look at a few of the CST systems that MAK has helped stimulate with constructive simulation.

Wargaming System to Stimulate C4I System – Raytheon IDS

What's At Stake?

Raytheon Integrated Defense Systems selected MAK to develop the simulation system to drive the wargaming & training component of their Command View C4I system that was subsequently delivered to a Raytheon customer.

The C4I system is used for national defense and simulated Command Post Exercises at the division, brigade, and battalion levels, providing opportunities for experimentation, doctrine development, and training.

How MAK Helped

MAK used VR-Forces' flexible architecture to develop and deliver a MAK Command and Staff Training System (MAK CST) to stimulate Raytheon’s C4I system. MAK CST acts as the synthetic environment for operational level training exercises — it feeds the C4I system with track data and reports from simulated forces.

Commanders and their staff lay down the Order of Battle and participate in the exercise using the same C4I system they would use in a real-world battle, while members of their organization direct the simulated forces by interacting with MAK CST in the simulation cell.

This two-year project resulted in MAK delivering a fully compliant system, on time, and within budget. Deliveries included documentation, training, comprehensive test procedures, and on-site integration support. The newly developed software has been integrated into the COTS VR-Forces product for ease of maintenance and upgrade. The system includes the VR-TheWorld Streaming Terrain Server.

Army Command and Staff Trainer – Dutch Army

Dutch Army Command and Staff Trainer using MAK ONE

What's At Stake?

In this use case, the trainees are military commanders who interact with the real C4I interface, which is configured to be in a training mode. Role players contribute to the simulation scenarios. And white-cell operators manage the exercise making sure the scenarios stay on track.

How MAK Helped

Working as a subcontractor to Elbit Systems, MAK delivered a modified version of VR-Forces that was used as the simulation engine for a Command and Staff Trainer for the Dutch Army. VR-Forces is used for scenario authoring, simulation execution, and as a role-player station for white-cell operators and instructors.

VR-Forces-simulated entities, and other objects, are displayed on the C4I system's map. Elbit, the developers of the C4I system, wrote a Gateway application using VR-Link and the VR-Forces Remote Control API that translates between the HLA protocols spoken by VR-Forces, and the C4I protocols used by the operational C4I system.

The Dutch system employs several VR-Forces simulation engines to share the load of simulating several thousand objects.
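One way to picture load sharing across several simulation engines is a greedy "least-loaded first" assignment, sketched below in Python. This is an assumption-laden illustration, not a description of how VR-Forces distributes objects: the per-entity cost values and the `assign_to_engines` function are hypothetical.

```python
import heapq


def assign_to_engines(entity_costs, engine_count):
    """Assign each entity to the currently least-loaded engine.

    entity_costs maps an entity name to an estimated simulation cost
    (arbitrary units). Returns {engine_index: [entity names]}.
    """
    if engine_count < 1:
        raise ValueError("at least one engine is required")
    # Min-heap of (current load, engine index).
    heap = [(0.0, index) for index in range(engine_count)]
    heapq.heapify(heap)
    assignment = {index: [] for index in range(engine_count)}
    # Place the most expensive entities first so the loads even out.
    ordered = sorted(entity_costs.items(), key=lambda item: item[1], reverse=True)
    for name, cost in ordered:
        load, index = heapq.heappop(heap)
        assignment[index].append(name)
        heapq.heappush(heap, (load + cost, index))
    return assignment


costs = {"tank_1": 3.0, "tank_2": 3.0, "crowd_1": 2.0, "uav_1": 1.0, "truck_1": 1.0}
assignment = assign_to_engines(costs, engine_count=2)
loads = {index: sum(costs[name] for name in names)
         for index, names in assignment.items()}
```

With these example costs both engines end up carrying the same total load, even though one simulates two objects and the other three.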

This project focuses on the tactical level more than the operational level. For example, it had requirements for 3D views (UAV camera displays and "pop the hatch" out-the-window views for operators playing the role of company commanders); thus, much of the actual simulation is at the entity level rather than the more constructive true aggregate level.

Air Defense Operations – Rheinmetall Canada

Air Defense Operations Using MAK ONE for Decision-Making Training

What's At Stake?

Rheinmetall Canada delivered an Air Defense Operations Simulation (ADOS) for the Dutch Army to be used for decision-making training of the staff of the air defense units responsible for Command and Control (C2). The Dutch Army is fielding new Ground Based Air Defense Systems (GBADS) from Rheinmetall and integrating these with Short Range Air Defense (SHORAD) systems, requiring new training systems for tactical decision-making as opposed to purely operation training. The ADOS system is also being used as an aid in the development of air defense Doctrine, Tactics, Techniques, and Procedures (DTTP).

How MAK Helped

The training system comprises a simulation network connected to a copy of the operational C2 system.

VR-Forces, MAK’s simulation solution, provides scenario generation and modeling of ground and air threats and blue force including the mobile GBADS. VR-Vantage is used as the primary interface for the role players which include the Stinger Weapon Platform platoons, the Norwegian Advanced Surface to Air Missile System (NASAMS) fire units, and opposing forces.

All positions are connected via the High Level Architecture (HLA) through the MAK High Performance RTI. Students, making up the Staff platoon, are at copies of the operational C2 system. The MAK Data Logger supports After Action Review.

“MAK’s VR-Forces and VR-Vantage combination of providing out-of-the-box capabilities with a powerful API for easy customization made them the ideal choice as the simulation and visualization component of the ADOS System. The technical capabilities of MAK’s products coupled with their excellent reputation for unsurpassed customer support made the decision to team with MAK an easy one,” said Ledin Charles, ADOS Program Manager, Rheinmetall Canada.

Air Defense Battle Management – DSTO Australia

DSTO's Air Defense Battle Management with MAK ONE

What's At Stake?

The Air Defence Ground Environment Simulator (ADGESIM) was developed by the Defence Science and Technology Organisation (DSTO) to evolve Royal Australian Air Force (RAAF) training systems and prepare personnel for network-centric operations. ADGESIM, a network-centric aerospace battle management application, is a major component of the RAAF strategy to adopt distributed simulation technology.

How MAK Helped

ADGESIM is comprised of three applications developed by DSTO and YTEK (DSTO engineering contractor) in C++ and closely integrated with VR-Forces and VR-Link. The ADGESIM Pilot Interface is used to create and fly simulated aircraft entities. All high-resolution entity modeling is done in the background by VR-Forces and ADGESIM entities have been proven compatible with both Australian and US Navy distributed simulation environments. The RAAF routinely runs scenarios with eight Pilot Interface workstations simultaneously without taxing the PC-based ADGESIM system.

Jon Blacklock, Head, Air Projects Analysis, Air Operations Research Branch of DSTO, explained that his research program “had identified VR-Forces and VR-Link as the only products with a sufficiently open architecture and useable programming interface to support rapid prototyping and development. Using a mixture of MAK’s COTS tools and custom-built ‘thin client’ applications, we were able to create ADGESIM in less than six months, for around half the original budget.”

“VR-Forces’ flexibility secured its place in our development,” said Blacklock. “The ease of customization and the API were among the biggest reasons we chose it. At the start, it lacked a few features on our wish list, but MAK’s outstanding tech support helped us fill in the gaps. During the six-month development, MAK redeveloped its applications and added features in parallel with our development. It is a testament to MAK’s professional approach that when we got the release version of VR-Forces, we needed just two weeks prior to installation in the first Regional Operations Center. We plugged in our applications and everything just worked.”

Experimentation with Command & Control Systems – Norwegian Defence Research Establishment (FFI)

Norwegian Defence Research Establishment uses VR-Forces for CST Experimentation

What's At Stake?

The Norwegian Defence Research Establishment (FFI) has established a demonstrator for experimentation with command and control information system (C2IS) technology. The demonstrator is used for studying middleware, different communication media, legacy information systems, and user interface equipment employed in C2ISs.

How MAK Helped

MAK’s VR-Forces computer generated forces simulation toolkit is a component of the demonstrator, serving as the general framework for rapid development of synthetic environments. It is used for describing the scenario and representing the behavior of most of the entities in the environment. The demonstrator’s flexible and extendable HLA-based synthetic environment supports VR-Forces participation as a federate in the HLA federation, allowing it to exchange data with entities and systems represented in external simulation models.

Networking Simulation Environments to C2+ Systems

USAF Networking Simulation to C2 Systems with MAK ONE

What's At Stake?

Large investments have been made in developing simulation systems that model and simulate various aspects of the military environment. Networking these together enables building systems-of-systems that model larger and more complete operational scenarios. Connecting these to operational C2, C3, C4I, and Mission Command systems allows commanders and their staff to have experiential learning opportunities that otherwise could not be achieved outside of actual conflict.

How MAK Helped

MAK’s VR-Link interoperability toolkit is the de facto industry standard for networking simulations. MAK’s implementation of the HLA run time infrastructure (RTI) has been certified for HLA 1.3 and HLA 1516, and is currently being certified for HLA 1516:2010. The MAK RTI has been used in many federations, some with over a thousand federates and tens of thousands of simulated entities. MAK’s universal translator, VR-Exchange, has been sold as a COTS product since 2005 and was first developed for and used on the US Army Test and Evaluation Command (ATEC) Distributed Test Event 2005 (DTE5) linking live, virtual and constructive simulations for the first time. Since then it has been used on many programs and at many sites to link together live (TENA), virtual (HLA), and constructive (DIS) simulations.

MAK has interfaced Battle Command to operational Command and Control Systems including C2PC, Cursor on Target, and JADOCS for the USMC and USAF. Previously we developed an interface for FBCB2.

The US Air Force selected MAK interoperability products for use on the Air Force Modeling and Simulation Training Toolkit (AFMSTT) program and we have successfully completed delivery. The MAK tools include the new MAK WebLVC Server, VR-Exchange, VR-Link and MAK Data Logger. Based on the Air Force's Air Warfare Simulation (AWSIM) model, the AFMSTT system enables training of senior commanders and staff for joint air warfare and operations. MAK's tools are being used to help migrate the AFMSTT system to a service-oriented architecture based on High Level Architecture (HLA) interoperability and web technologies.

Three ways MAK can help your CST system:

- Use MAK’s COTS products—the MAK ONE suite of software—and standard support to develop your own CST solution. Our products will reduce cost, time, and risk on your project and provide a solid foundation for a CST system. Many customers use MAK’s products out of the box to meet their CST requirements. However, our open toolkits allow you to add your own content, modify the software as required, and continue to maintain and enhance the system going forward.

- Purchase additional MAK services such as customized software, training, and on-site integration support. We are experts in system design, simulation networking via HLA and DIS, interfacing to operational C2 systems, developing customized user interfaces, and adding user-specific simulation content.

- Contract MAK to deliver a complete turnkey solution based on your customer’s specific requirements. Read the battle-tested use cases below.

Our training systems can be run stand-alone or connected to operational Command and Control Systems. Our simulation technology natively uses HLA/DIS to interoperate with embedded and remote simulation systems, as well as live training systems.

We embrace modern IT architectures including thin clients, web delivery, cloud distribution of data, and mobile devices.

Power and Flexibility

Our technology provides a strong technical foundation for your project

- Modeling of mobility, logistics, engagement, combat engineering, sensors, communications, and the environment, including NBC, weather, and terrain

- Flexible scenario authoring and execution GUIs with 2D tactical map displays, 3D scenes, advanced artificial intelligence, and Master Scenario Event List (MSEL) Editor

- Built-in AAR support

- Loads standard GIS data and maps on which to build your scenarios

- Native HLA compliance and ability to stimulate external C4I systems

- Editors to create new force structures, script new behaviors, and create complex tasks

- Fully-documented and supported software development kits (SDK) for user customization and integration
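For readers unfamiliar with the Master Scenario Event List (MSEL) mentioned in the list above, the following Python sketch shows the basic idea: a time-ordered queue of scripted injects that are released as simulation time advances. The `Inject` and `Msel` classes are hypothetical illustrations under that assumption and are unrelated to the actual MSEL Editor or its file format.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Inject:
    time_s: float                            # scheduled simulation time
    description: str = field(compare=False)  # what the instructor injects


class Msel:
    """Minimal time-ordered list of scripted scenario events."""

    def __init__(self, injects):
        self._heap = list(injects)
        heapq.heapify(self._heap)

    def pop_due(self, sim_time_s):
        """Remove and return every inject scheduled at or before sim_time_s."""
        due = []
        while self._heap and self._heap[0].time_s <= sim_time_s:
            due.append(heapq.heappop(self._heap))
        return due


msel = Msel([
    Inject(600.0, "Jam communications"),
    Inject(120.0, "IED detonation on the convoy route"),
    Inject(300.0, "Visibility drops due to fog"),
])
first_batch = [inject.description for inject in msel.pop_due(300.0)]
second_batch = [inject.description for inject in msel.pop_due(300.0)]
final_batch = [inject.description for inject in msel.pop_due(1000.0)]
```

Polling `pop_due` once per simulation tick releases each inject exactly once, in scheduled order, regardless of the order in which the events were authored.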

Shipboard Weapons Training System

This Shipboard Weapons Training System immerses trainees within a virtual environment. Students learn to operate the weapons along with team communication and coordination.

Related Use Case - French Navy's ship defense simulators create an immersive environment allowing personnel to train and practice shipboard defense against any kind of threat.

Shipboard weapons training systems focus on developing coordination and firing skills amongst a gunnery unit at sea. When it comes to appropriate levels of fidelity, it is important to develop a system that replicates the firing process, accurately renders weapon effects, and instills the environmental accuracy of operating on a ship in motion. In addition, the training system must stimulate the unit with appropriate threats.

MAK’s off-the-shelf technologies transform this system into a realistic simulation environment, providing an ideal training ground for a large variety of training skills and learning objectives.

The MAK Advantage:

Choosing MAK for your simulation infrastructure gives you state-of-the-art technology and the renowned ‘engineer down the hall’ technical support that has been the foundation of MAK’s culture since its beginnings.

MAK Capabilities within each of the simulation components:

The Dome and the UAV station — VR-Vantage

VR-Vantage

- Game/Simulator Quality Graphics and Rendering Techniques take advantage of the increasing power of NVIDIA graphics cards, yielding stunning views of the ocean, the sky, and everything in between.

- Built in Support for Multi-Channel Rendering. Depending on system design choices for performance and number of computers deployed, VR-Vantage can render multiple channels from a single graphics processor (GPU) or can render channels on separate computers using Remote Display Engines attached to a master IG channel.

- 3D Content to Represent many vehicle types, human characters, weapon systems, and destroyable buildings. Visual effects are provided for weapons engagements including particle systems for signal smoke, weapon fire, detonations, fire, and smoke.

- Terrain Agility supports most of the terrain strategies commonly used in the modeling, simulation & training industry.

- Environmental effects are modeled to render with realism: proper lighting, day or night; the effects of shadows; and atmospheric and water effects, including dynamic oceans (tidal, swell size and direction, transparency, reflection, etc.). Add multiple cloud layers, wind, rain, snow, even trees and grass that move naturally with the variations of wind.

- Sensor Modeling captures the look and feel of the UAV sensor feed. Sensors can be controlled by a participant or given an artificial intelligence plan. Add SensorFX to model physically accurate views from a UAV's sensor, accounting for environmental variations, such as fog, dew, snow, rain, and many other factors that influence temperature and visibility.
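
To make the multi-channel rendering idea above more concrete, here is a minimal Python sketch of how a wide field of view might be divided among display channels. It is not VR-Vantage configuration or API; the function name `channel_yaws` and the assumption of equal-width, side-by-side channels are illustrative only.

```python
def channel_yaws(total_fov_deg, channels):
    """Return the center yaw (degrees) of each channel.

    Assumes the total horizontal field of view is split into equal,
    side-by-side slices centered on the forward direction (yaw 0).
    """
    if channels < 1:
        raise ValueError("at least one channel is required")
    per_channel = total_fov_deg / channels
    first_center = -total_fov_deg / 2 + per_channel / 2
    return [first_center + i * per_channel for i in range(channels)]


# Hypothetical dome: 180 degrees of horizontal coverage across 3 channels.
yaws = channel_yaws(180, 3)
```

Whether those channels are drawn by one GPU or by separate computers does not change the geometry; it only changes where each slice is rendered.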

First-person Helicopter Flight Simulator —  VR-Engage

VR-Engage

- A high-fidelity vehicle physics engine needed for accurate vehicle motion.

- Ground, rotary and fixed-wing vehicles, and the full library of friendly, hostile, and neutral DI-Guy characters.

- Radio and voice communications over DIS and HLA using Link products.

- Sensors, weapons, countermeasures, and behavior models for air-to-air, air-to-ground, on-the-ground, and person-to-person engagements.

- Vehicle and person-specific interactions with the environment (open and close doors, move, destroy, and so on.)

- Standard navigation displays and multi-function display (MFD) navigation chart.

- Terrain agility. As with VR-Vantage and VR-Forces, you can use the terrain you have or take advantage of innovative streaming and procedural terrain techniques.

Instructor Operator Station — VR-Forces and VR-Engage

VR-Forces is a powerful, scalable, flexible, and easy-to-use computer generated forces (CGF) simulation system used as the basis of Threat Generators and Instructor Operator Stations (IOS). VR-Forces provides the flexibility to fit a large variety of architectures right out of the box or to be completely customized to meet specific requirements.

VR-Engage lets the instructor choose when to play the role of a first-person human character; a vehicle driver, gunner, or commander; or the pilot of an airplane or helicopter. The instructor takes interactive control of the selected vehicle or character in real-time, engaging with other entities using input devices. This adds the human touch for a higher behavioral fidelity when needed.

Some of VR-Forces’ many capabilities:

- Scenario Definition that enables instructors to create, execute, and distribute simulation scenarios. Using its intuitive interfaces, they can build scenarios that scale from just a few individuals in close quarters to large multi-echelon simulations covering the entire theater of operations. The user interface can be used as-is or customized for a training-specific look and feel.

- Simulating objects' behavior and interactions with the environment and other simulation objects. These behaviors give VR-Forces simulation objects a level of autonomy to react to the rest of the simulation on their own. This saves you from having to script their behavior in detail.

- It's easy to set up and preserve your workspace, override default behaviors, and modify simulation object models. Simulation objects have many parameters that affect their behavior.

- Training Exercise Management, allowing the instructor to manipulate all entities in real-time while the training is ongoing.

- Artificial Intelligence (AI) control, where entities are given tasks to execute missions, like attack plans that trigger when the ship reaches a certain waypoint. While they are on their mission, reactive tasks deal with contingencies and the CGF AI plays out the mission.

- 2D & 3D Viewing Control that allows the instructor to switch between and save their preferred points of view in real-time.

- High-fidelity weapon interaction components can be plugged in for accurate real-time measurements.
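
The waypoint-triggered plans described in the AI control item above can be pictured as a simple condition/action rule. The sketch below is illustrative Python only and does not use the VR-Forces API; `Entity`, `WaypointTrigger`, the arrival radius, and the task string are all hypothetical.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Entity:
    """Hypothetical simulated entity with a 2D position in meters."""
    name: str
    x: float
    y: float
    tasks: list = field(default_factory=list)


@dataclass
class WaypointTrigger:
    """Assigns a task once, when the watched entity reaches a waypoint."""
    wx: float
    wy: float
    radius: float
    task: str
    fired: bool = False

    def update(self, watched, actor):
        """Check the trigger condition; return True on the tick it fires."""
        if self.fired:
            return False
        if math.hypot(watched.x - self.wx, watched.y - self.wy) <= self.radius:
            actor.tasks.append(self.task)
            self.fired = True
            return True
        return False


ship = Entity("ship", 0.0, 0.0)
raider = Entity("raider", 5000.0, 0.0)
trigger = WaypointTrigger(wx=1000.0, wy=0.0, radius=50.0, task="attack ship")

# Step the ship east in 100 m increments and evaluate the trigger each tick.
for _ in range(12):
    ship.x += 100.0
    trigger.update(ship, raider)
```

The `fired` flag keeps the plan from being assigned again while the ship remains near the waypoint.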

Want to learn about trade-offs in fidelity? See The Tech-Savvy Guide to Virtual Simulation.

Air Mission Operations Training Center

Air Mission Operations Training Centers are large systems focused on training aircraft pilots and the teams of people needed to conduct air missions.

To make the simulation environment valid for training, simulators are needed to fill the virtual world with mission support units, opposing forces & threats, and civilian patterns of life. Depending on the specifics of each training exercise, the fidelity of each simulation can range from completely autonomous computer generated forces, to desktop role-player stations, to fully immersive training simulators.

The MAK Advantage:

MAK technologies can be deployed in many places within an air missions operations training center.

VT MAK provides a powerful and flexible computer generated forces simulation, VR-Forces, which is used to manage air, land, and sea missions, as well as civilian activity. It can be the ‘one CGF’ for all operational domains.

Desktop role players and targeted fidelity simulators are used where human players are needed to increase fidelity and represent tactically precise decision-making and behavior.

Remote simulation centers connect over long-haul networks to participate when specific trials need the fidelity of those high-value simulation assets. MAK offers an interoperability solution that facilitates a common extensible simulation architecture based on international standards. VR-Link helps developers build DIS & HLA into their simulations. VR-Exchange connects simulations even when they use differing protocols. The MAK RTI provides the high-performance infrastructure for HLA networking.
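
For readers unfamiliar with what travels over a DIS network, the following Python sketch packs and unpacks the 12-byte header that begins every DIS PDU. It uses only the standard `struct` module and is not VR-Link code; the field layout follows the IEEE 1278.1 header as used with protocol version 6, and the helper names `pack_header` and `unpack_header` are hypothetical.

```python
import struct

# 12-byte DIS PDU header, big-endian (network byte order):
# protocol version, exercise ID, PDU type, protocol family (1 byte each),
# timestamp (4 bytes), PDU length in bytes (2 bytes), padding (2 bytes).
HEADER_FORMAT = ">BBBBIHH"
HEADER_SIZE = struct.calcsize(HEADER_FORMAT)

ENTITY_STATE_PDU = 1     # PDU type: Entity State
ENTITY_INFO_FAMILY = 1   # protocol family: Entity Information/Interaction


def pack_header(exercise_id, pdu_type, family, timestamp, length, version=6):
    """Build the header bytes for one PDU."""
    return struct.pack(HEADER_FORMAT, version, exercise_id, pdu_type,
                       family, timestamp, length, 0)


def unpack_header(data):
    """Decode the first HEADER_SIZE bytes of a received PDU."""
    version, exercise_id, pdu_type, family, timestamp, length, _padding = \
        struct.unpack(HEADER_FORMAT, data[:HEADER_SIZE])
    return {
        "version": version,
        "exercise_id": exercise_id,
        "pdu_type": pdu_type,
        "family": family,
        "timestamp": timestamp,
        "length": length,
    }


header = pack_header(exercise_id=1, pdu_type=ENTITY_STATE_PDU,
                     family=ENTITY_INFO_FAMILY, timestamp=123456, length=144)
decoded = unpack_header(header)
```

A networking toolkit hides this level of detail, which is the point of using one; the sketch only shows the kind of byte-level agreement that interoperating simulations depend on.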

Local simulators, based on MAK’s VR-Engage, take the place of remote simulations — when connecting to remote facilities is not needed. VR-Engage lets users play the role of a first-person human character; a vehicle driver, gunner, or commander; or the pilot of an airplane or helicopter.

VR-Engage can be used for role-player stations or as the basis for targeted fidelity or high-fidelity simulators.

MAK products are meant to be used in complex simulation environments — interoperating with simulations built by customers and other vendors. However, big efficiencies are gained by choosing MAK products as the core of your simulation environment.

Want to learn about trade-offs in fidelity? See The Tech-Savvy Guide to Virtual Simulation.

Interested in seeing a demonstration?

Flight Deck Safety Training

Flight Deck Training

What’s at stake?

Working on the flight deck of an aircraft carrier is dangerous business, especially if your job is in “the bucket” and you’re supposed to release the catapult to accelerate a jet down the deck to aid its takeoff. You need to set the tension on the catapult after you get the weight from the refueling crew, you need to get the thumbs up from the deck chief, and finally make sure that the pilot is focused on the runway and ready. If you let that catapult go too soon, it's going to hurt – a lot.

The MAK Advantage

MAK has the tools you need to develop a training system to teach flight deck safety procedures.

- DI-Guy Scenario can create human character performances that model the activities on the flight deck.

- With the DI-Guy SDK, you can integrate the performances into your image generator, or you can use VR-Vantage, which has DI-Guy built in.

- If you need special gestures to meet your training requirements, you can use the DI-Guy Motion Editor to customize the thousands of character appearances that come with all DI-Guy products. Or you can create characters from motion capture files.

- If your training requires the detail of specific facial expressions, then DI-Guy Expressive Faces will plug right in and give you the control you need.

- And if you’d like help pulling this all together, MAK is here to help, with renowned product support and custom services to ensure your training system is a success.

Mechanized Infantry Training

Close Air Support: JTAC Training

As part of a Tactical Air Control Party (TACP), only the Joint Terminal Attack Controller (JTAC) is authorized to say CLEARED HOT on the radio and direct aircraft to deliver their ordnance on a target.

JTACs are relied on to direct and coordinate close air support missions, advise commanders on matters pertaining to air support, and observe and report the results of strikes. Their ability to communicate effectively with pilots and coordinate accurate air strikes can play a huge role in the success of a mission.

Virtual training systems allow JTACs to practice identifying targets, calibrating their locations, requesting air support, and the highly-specialized procedures for communicating with pilots.

The MAK Advantage:

The JTAC simulator in this use case takes advantage of simulations built on MAK’s core technologies.

The tight coupling of system components provides a rich simulation environment for each participant. The JTAC simulation is rendered in the dome using VR-Vantage; the flight simulation takes advantage of the VR-Forces first-person simulation engine; and the instructor/role player station uses VR-Forces CGF to populate the synthetic environment and control the training scenarios.

All these system components share a common terrain database and are connected together using VR-Link and the MAK RTI, giving the system integrator the ability to deploy reliably and cost effectively while leaving open the opportunity to expand the system to add bigger and more complex networks of live, virtual and/or constructive simulations.

Choosing MAK for your simulation infrastructure gives you state-of-the-art technology and the renowned ‘engineer down the hall’ technical support that has been the foundation of MAK’s culture since its beginnings.

Capabilities the core technologies bring to the simulators:

JTAC Dome — Built with VR-Vantage

- Game/Simulator Quality Graphics and Rendering Techniques - VR-Vantage uses the most modern image rendering and shader techniques to take advantage of the increasing power of NVIDIA graphics cards. VT MAK's Image Generator has real-time visual effects to rival any modern IG or game engine.

- Multi-Channel Rendering - Support for multi-channel rendering is built in. Depending on system design choices for performance and number of computers deployed, VR-Vantage can render multiple channels from a single graphics processor (GPU) or can render channels on separate computers using Remote Display Engines attached to a master IG channel.

- 3D Content to Represent Players and Interactions - VR-Vantage is loaded with content including 3D models of all vehicle types, human characters, weapon systems, and destroyable buildings. Visual effects are provided for weapons engagements including particle systems for signal smoke, weapon fire, detonations, fire, and smoke.

- Terrain Agility - All MAK’s simulation and visualization products are designed to be terrain agile, which means that they can support most of the terrain strategies commonly used in the modeling, simulation & training industry. Look here for technical details and a list of the formats supported.

- Environmental Modeling - VR-Vantage can render scenes of the terrain and environment with the realism of proper lighting — day or night, the effects of illuminated light sources and shadows, atmospheric and water effects including multiple cloud layers effects and dynamic oceans, trees and grass that move naturally with the wind.

- Sensor Modeling - VR-Vantage can render scenes in all wavelengths: night vision, infrared, and visible (as needed on a JTAC's dome display). Sensor zooming, depth of field effects, and reticle overlays model the use of binoculars and laser range finders.

Flight Simulator — Built with VR-Forces & VR-Vantage

- Flight Dynamics - High-fidelity physics-based aerodynamics model for accurate flight controls using game- or professional-level hands-on throttle and stick controls (HOTAS).

- Air-to-Ground Engagements - Sensors: targeting pod (IR camera with gimbal and overlay) and SAR request/response (requires RadarFX SAR Server). Weapons: missiles, guns, and bombs.

- Navigation - Standard six-pack navigation displays and multi-function display (MFD) navigation chart.

- Image Generator - All the same VR-Vantage-based IG capabilities in a flight simulator/roleplayer station as in the JTAC’s dome display. The flexibility to configure as needed: Single screen (OTW + controls + HUD), Dual screen (OTW + HUD, controls), Multi-Screen OTW (using remote display engines).

- Integration with IOS & JTAC - The flight simulator is integrated with the VR-Forces-based IOS so the instructor can initialize the combat air patrol (CAP) mission appropriately in preparation for the close air support (CAS) mission called by the JTAC. All flights are captured by the MAK Data Logger for after-action review (AAR) analysis and debriefing. Radios are provided that communicate over the DIS or HLA simulation infrastructure and are recorded by the MAK Data Logger for AAR.

Instructor Operator Station — Built with VR-Forces

VR-Forces is a powerful, scalable, flexible, and easy-to-use computer generated forces (CGF) simulation system used as the basis of Threat Generators and Instructor Operator Stations (IOS).

- Scenario Definition - VR-Forces comes with a rich set of capabilities that enable instructors to create, execute, and distribute simulation scenarios. Using its intuitive interfaces, they can build scenarios that scale from just a few individuals in close quarters to large multi-echelon simulations covering the entire theater of operations. The user interface can be used as-is or customized for a training-specific look and feel.

- Training Exercise Management - All of the entities defined by a VR-Forces scenario can be interactively manipulated in real-time while the training is ongoing. Instructors can choose from:

  - Direct control, where new entities can be created on the fly or existing entities can be moved into position, and their status, rules of engagement, or tasking changed on a whim. Some call the instructor using this method a “puckster”.

  - Artificial Intelligence (AI) control, where entities are given tasks to execute missions, like close air support (CAS), suppressive fire, or attack with guns. While they are on their mission, reactive tasks deal with contingencies and the CGF AI plays out the mission. In games, these are sometimes called “non-player characters”.

  - First-person control, where the instructor takes interactive control of a vehicle or human character, moves it around, and engages with other entities using input devices.

- 2D & 3D Viewing Control - When creating training scenarios, the VR-Forces GUI allows instructors to quickly switch between 2D and 3D views.

  - The 2D view provides a dynamic map display of the simulated world and is the most productive for laying down entities and tactical graphics that help to control the AI of those entities.

  - The 3D views provide an intuitive, immersive, situational awareness and allow precise placement of simulation objects on the terrain. Users can quickly and easily switch between display modes or open a secondary window and use a different mode in each one.

Want to learn about trade-offs in fidelity? See The Tech-Savvy Guide to Virtual Simulation.

Interested in seeing a demonstration?

Air Traffic Simulation

Bringing Advanced Traffic Simulation and Visualization Technologies to Help Design and Build the Next-Generation ATM System

The U.S. Federal Aviation Administration (FAA) is embarking on an ambitious project to upgrade the nation’s air traffic management (ATM) systems. Each team under the FAA Systems Engineering 2020 (SE2020) IDIQ will need an integrated, distributed modeling and synthetic simulation environment to try out their new systems and concepts. Aircraft manufacturers will need to upgrade their aircraft engineering simulators to model the new ATM environment in order to test their equipment in realistic synthetic environments.

The MAK Advantage:

MAK saves our customers time, effort, cost, and risk by applying our distributed simulation expertise and suite of tools to ATM simulation. We provide cost-effective, open solutions to meet ATM visualization and simulation requirements, in the breadth of application areas:

- Concept Exploration and Validation

- Control Tower Training

- Man-Machine Interface Research

- System Design (by simulating detailed operation)

- Technology Performance Assessment

MAK is in a unique position to help your company in this process. Many players in the aviation space already use MAK products for simulation, visualization, and interoperability. For the ATM community we can offer:

- Simulation Integration and Interoperability. The US has standardized on the High Level Architecture (HLA) protocol for interoperability and the MAK RTI is already in use as part of the AviationSimNet. We can provide general distributed simulation interoperability services, including streaming terrain (VR-TheWorld Server, GIS Enabled Modeling & Simulation), gateways to operational systems (VR-Exchange), and simulation debugging and monitoring (HLA Traffic Analyzer).

- Visualization. MAK’s visual simulation solution, VR-Vantage, can be used to create 3D representations of the airspace from the big picture to an individual airport. VR-TheWorld can provide central terrain storage.

- Simulation. VR-Forces is an easy-to-use CGF used to develop specific models for non-commercial aviation entities such as UAVs, fighter aircraft, rogue aircraft, people on the ground, ground vehicles, etc. In addition, as an open toolkit, VR-Forces may well be preferred in many labs over the closed ATC simulators.

Drone Ops

UAS Operations

What’s at Stake?

You are tasked with training a team of sensor payload operators to use UAVs for urban reconnaissance missions in a specific city. Upon completion of training, trainees must be able to comb an area for a target, make a positive identification, monitor behavior and interactions, radio in an airstrike, and then report on the outcome.

An ineffective training environment could lead to additional costs, losing important targets, and inefficient surveillance systems. Training with a robust solution enhances homeland security human resources for minimal product investment.

What Are We Building?

As the instructor, you need to mock up a ground control station with accurate pilot/payload operator role definitions and supply that system with surveillance data from a content-rich simulation environment. You need to construct a scene that is informative while providing trainees with opportunities to develop their instincts and test their operating procedures based on how the scenario unfolds.

Each UAV must be equipped with an electro-optical camera as well as an infrared sensor mounted to a gimbal. Radio communication between the UAV operators and a central command center must be available to coordinate surveillance and call in airstrikes.

Trainees need to experience the scenario through the electro-optical sensor and infrared sensor with rich, accurate data overlays to provide them with the information they need to communicate positioning and targeting effectively.

Your urban environment requires crowds of people who behave in realistic ways and traverse the city in intelligent paths. When a UAV operator spots someone, they need to be able to lock onto them when they are in motion to mimic algorithmic tracking tools.

The simulation needs to be adjustable in real-time so that the instructor can minimize repeat behaviors and walk the team through different scenarios. Instructors also must be able to judge the effectiveness of a trainee’s technique.

The MAK Advantage:

In this particular case, VR-Forces provides all the software you need to bring your environment to life.

Watch this MAKtv episode to see how easy it is to set up.

Sensor modeling is a point of strength for VR-Forces. Give your trainees a beautiful, detailed point of view of the scene through the electro-optical sensor, and provide a high-fidelity infrared sensor display when the daylight fades. VR-Forces adds accurate data overlays so that trainees can learn to quickly and accurately read and report based on that information. Instructors can visualize 3D volumetric view frustums, assess trainees’ combing strategies as well as any gaps in coverage, and engineer surveillance systems. We model sensor tracking to lock onto targets while they are in motion or at a fixed location.

VR-Forces is an ideal tool for scenario development. It can model UAVs in fine detail, while allowing instructors to customize those entities based on the scope of a mission. It’s simple to add the gimbal-mounted sensor array that we need for this scenario and define parameters for it, including zoom, zoom speed, slew rate, and gimbal stops. Easily populate an urban environment with people by using the group objects function to add crowds of entities at a time. VR-Forces has features from Autodesk's Gameware built in, enabling Pattern of Life intelligent flows of people and vehicles, in addition to plotting the locations and tasks of individual entities. Pattern of Life lets you manipulate patterns within the scenario, including realistic background traffic, whether it’s people, road, or air. Certain DI-Guy capabilities have been integrated into VR-Forces, meaning behavior modeling is more authentic, thanks to motion capture technology. Now you can train your team to look out for certain suspicious movements and calibrate their responses based on the actions of the target.
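
To illustrate what gimbal parameters such as slew rate and gimbal stops mean in practice, here is a small Python sketch of one gimbal axis. It is not VR-Forces code or configuration; the class `GimbalAxis`, its fields, and the numeric values are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class GimbalAxis:
    """One axis of a hypothetical sensor gimbal; angles in degrees."""
    angle: float
    min_stop: float
    max_stop: float
    slew_rate: float  # maximum rotation speed, degrees per second

    def step(self, target, dt):
        """Rotate toward target for dt seconds.

        The commanded angle is first clamped to the gimbal stops, then the
        change applied this step is limited by the slew rate.
        """
        target = max(self.min_stop, min(self.max_stop, target))
        max_delta = self.slew_rate * dt
        delta = max(-max_delta, min(max_delta, target - self.angle))
        self.angle += delta
        return self.angle


# Pan axis that can turn 60 deg/s and stops at +/-170 degrees.
pan = GimbalAxis(angle=0.0, min_stop=-170.0, max_stop=170.0, slew_rate=60.0)

# Command a pan beyond the stop; the axis slews for four 1-second steps.
history = [pan.step(target=200.0, dt=1.0) for _ in range(4)]
```

In this run the axis moves at its rate limit until it reaches the stop and then holds there, which is the behavior a payload operator would see when trying to track a target that passes outside the gimbal's range.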

What really makes VR-Forces perfect for training is the ability of instructors to manipulate the scenario in real-time. You can keep your trainees from running scenarios that are too predictable by having your target enter buildings, change his mode of transportation, or actively attempt to avoid detection, all during live action.

Interested in Learning More? Have a look at the VR-Forces page for more information.

Reach out to request a personal demonstration.

Developing Air and Ground Traffic Policy

Developing Air and Ground Traffic Policy in a World Increasingly Populated by UAS

As UAS technologies become more accessible, an increase in air traffic, particularly around urban centers, is inevitable. It will be essential for governments and their agencies to develop policies with regard to air traffic and its relationship with ground traffic, specifically for low-flying UASs, and particularly in emergency situations. Well-developed traffic management will maximize safe traffic speed in regular conditions and divert flows efficiently in emergency scenarios when first responders are rushing to a scene. Poor planning may result in economic and human loss. Simulation is an ideal space to test current traffic policies under changing conditions and to research and develop new solutions.

Governments and agencies need a tool that can depict an area modeled after their own and simulate air traffic within it. The tool should be capable of depicting specific types of air traffic, including planes, helicopters, and UASs, as well as airspace demarcation. There needs to be a concurrent display of ground traffic, including pedestrians, bicyclists, and vehicles - particularly around the scene of an incident. Policymakers want to be able to visualize traffic flows and craft response strategies for general and specific situations.

The MAK Advantage:

MAK offers commercial-off-the-shelf (COTS) technology to construct airspace simulations, backed by a company with an “engineer down the hall” philosophy to help organizations select and implement the most effective solution.

VR-Forces provides a scalable computer-generated forces simulation engine capable of populating an environment with air and ground traffic, as well as infrastructure specific to traffic systems. There is plenty of out-of-the-box content of all shapes and sizes, from sUAS up to 747s in the air, and everything from human characters and bicyclists to fire trucks on the ground. If an out-of-the-box model needs to be modified to match local specifications, or if an agency wants to create their own from scratch, MAK’s open-source API allows for full customization of entity appearance and performance.

VR-Forces depicts volumetric airspace regulations, giving policymakers a three-dimensional perspective of air corridors and restricted spaces as they swell and shrink. Crucially, volumetric airspace restrictions can be assigned to impact air and ground traffic systems accordingly. For example, if there was an auto accident, set policies could dictate an air restriction in the area up to a certain height to provide space for UAS emergency response and redirect UAS traffic as long as necessary. At the same time, traffic on the ground within a particular radius may have their speeds reduced, or lanes may be opened specifically for first responders to access the scene more readily.
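
The auto accident example above can be sketched in a few lines of Python to show the kind of rule a policymaker might test. This is an illustration only, not how VR-Forces represents airspace; the cylinder shape, the class `CylinderRestriction`, the function `ground_speed_limit`, and all numbers are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class CylinderRestriction:
    """Hypothetical restricted volume: a vertical cylinder over an incident."""
    cx: float       # incident location, meters east
    cy: float       # incident location, meters north
    radius: float   # horizontal extent, meters
    ceiling: float  # top of the restriction, meters above ground

    def contains(self, x, y, altitude):
        """True if the point lies inside the restricted volume."""
        inside_radius = math.hypot(x - self.cx, y - self.cy) <= self.radius
        return inside_radius and 0.0 <= altitude <= self.ceiling


def ground_speed_limit(restriction, x, y, normal, reduced, ground_radius):
    """Speed limit for a ground vehicle, reduced near the incident."""
    near = math.hypot(x - restriction.cx, y - restriction.cy) <= ground_radius
    return reduced if near else normal


# Restriction up to 120 m within 300 m of an accident at the origin.
accident = CylinderRestriction(cx=0.0, cy=0.0, radius=300.0, ceiling=120.0)

low_uas_blocked = accident.contains(100.0, 100.0, 60.0)    # inside the volume
high_uas_blocked = accident.contains(100.0, 100.0, 150.0)  # above the ceiling
car_limit = ground_speed_limit(accident, 450.0, 0.0,
                               normal=50.0, reduced=20.0, ground_radius=500.0)
```

Changing `radius`, `ceiling`, or `ground_radius` and re-running the scenario is the code-level analogue of calibrating an air corridor and measuring its effect on traffic.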

Policymakers can calibrate the size and rules applied to air corridors and measure the impact of these changes on the traffic patterns of the city. VR-Forces is capable of depicting traffic density as it shifts with new incidents, even assigning color-coded density maps to better visualize areas of congestion in air and on the ground.

VR-TheWorld allows policymakers to test these impacts inside any city for which they have the terrain data, through a web-based interface. This creates the most realistic testing lab for research and development projects.

Want to learn more? Have a look at the VR-Forces page for more information. Interested in seeing a demonstration?

Incident Management

Using the Power of Modeling & Simulation for First Responder Training, Emergency Response Preparedness, and Critical Infrastructure Protection

Homeland security, emergency response, and public safety communities face challenges similar to those dealt with in the military domain--they need to plan and train. But large-scale live simulations are simply too disruptive to be conducted with regularity. Catastrophic emergencies require coordination of local and state public safety personnel, emergency management personnel, the National Guard, and possibly regular military. Interoperability is a major problem.

On a basic level, simulations require generic urban terrains with multi-story interior models, transportation infrastructure such as subways and airports, and the ability to simulate crowd behaviors and traffic. They may require terrains for specific urban areas or transportation infrastructure. Given the role of ubiquitous communications in the public sector, the ability to simulate communications networks (landline, cell, data) and disruptions in them may also be important. For specialized emergency response training, the ability to simulate chemical, biological, and radiological dispersion may also be necessary.

The need for simulation and training in this domain is self-evident. The budgetary constraints are daunting for many agencies. The cost-effective solutions that MAK has developed for the defense community can provide immediate benefits to homeland security, emergency response, and public safety agencies.

- First Responder Training

- Emergency Response Planning

- Perimeter Monitoring/Security

- Human Behavior Studies

The MAK Advantage:

MAK can help you use simulation systems to keep your homeland secure. Here's how:

- With VR-Link, VR-Exchange and MAK RTI: Link simulation components into simulation systems, or connect systems into worldwide interoperable distributed simulation networks.

- With VR-Forces: Build and Populate 3D simulation environments (a.k.a. virtual worlds), from vehicle or building interiors to urban terrain areas, to the whole planet. Then Simulate the mobility, dynamics and behavior of people and vehicles; from individual role players to large-scale simulations involving tens of thousands of entities.

- With VR-Vantage: Visualize the simulation to understand analytical results or participate in immersive experiences.

Instructor Operator Stations (IOS)

Instructor Operator Stations

Where does the Instructor Operator Station fit within the system architecture?

Training events are becoming larger and more widely distributed across networked environments. Yet staffing for these exercises is often static, or even decreasing. Therefore, instructors and operators need IOS systems to help manage their tasks, including designing scenarios, running exercises, providing real-time guidance and feedback, and conducting AAR.

Instructor Operator Stations (IOS) provide a central location from which instructors and operators can manage training simulations. An effective IOS enables seamless control of exercise start-up, execution, and After Action Review (AAR) across distributed systems. It automates many setup and execution tasks and provides interfaces tailored to the simulation domain for tasks that are done manually.

How does MAK software fit within the Instructor Operator Station?

MAK has proven technologies that allow us to build and customize an IOS to meet your training system requirements.

- Simulation Control Interface – Instructors can create and modify training scenarios. Execution of the scenarios may be distributed across one or more remote systems. The instructor or operator can dynamically inject events into a scenario to stimulate trainee responses, or otherwise guide a trainee’s actions during a training exercise. Core technology: VR-Forces Graphical User Interface

- Situational Awareness – The MAK IOS includes a 2D tactical map display, a realistic 3D view, and an eXaggerated Reality (XR) 3D view. All views support scenario creation and mission planning. The 3D view provides situational awareness and an immersive experience. The 2D and XR views provide the big-picture battlefield-level view and allow the instructor to monitor overall performance during the exercise. To further the instructor’s understanding of the exercise, the displays include tactical graphics such as points and roads, entity effects such as trajectory histories and attacker-target lines, and entity details such as name, heading, and speed. Core technology: VR-Vantage.

- Analysis & After Action Review – The MAK IOS supports pre-mission briefing and AAR / debriefing. It can record exercises and play them back. The instructor can annotate key events in real-time or post-exercise, assess trainee performance, and generate debrief presentations and reports. The logged data can be exported to a variety of databases and analysis tools for data mining and performance assessment. Core technology: MAK Data Logger.

- Open Standards Compliance – The MAK IOS supports the High Level Architecture (HLA) and Distributed Interactive Simulation (DIS) protocols. Core technology: VR-Link networking toolkit.

- Simulated Voice Radios – The MAK IOS optionally includes services to communicate with trainees using real or simulated radios, VOIP, or text chat, as appropriate for the training environment.
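The event injection described under Simulation Control Interface can be pictured as a time-ordered event list that the instructor keeps adding to while the exercise runs. The Python sketch below is only an illustration of that idea. It is not the VR-Forces API, and every name in it (MselEvent, MselQueue, inject, due) is hypothetical.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class MselEvent:
    """One scripted event; ordering uses only the scenario time."""
    time_s: float
    description: str = field(compare=False)


class MselQueue:
    """Hypothetical time-ordered event list, not part of any MAK product."""

    def __init__(self):
        self._heap = []

    def inject(self, time_s, description):
        # The instructor adds an event, before or during the exercise.
        heapq.heappush(self._heap, MselEvent(time_s, description))

    def due(self, now_s):
        # Pop every event scheduled at or before now_s, earliest first.
        fired = []
        while self._heap and self._heap[0].time_s <= now_s:
            fired.append(heapq.heappop(self._heap))
        return fired


msel = MselQueue()
msel.inject(120.0, "Convoy reports roadside obstacle")
msel.inject(30.0, "Radio check from company HQ")
early = [e.description for e in msel.due(60.0)]

# An event injected mid-exercise is merged into the remaining timeline.
msel.inject(90.0, "Helicopter diverted by weather")
late = [e.description for e in msel.due(150.0)]

print(early)
print(late)
```

A priority queue keeps injected events in scenario-time order no matter when the instructor adds them, which is the property a dynamic inject needs.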

Scenario Generators

Scenario/Threat Generators

Where does the Scenario/Threat Generator fit within the system architecture?

Your job is to place a trainee or analyst in a realistic virtual environment in which they can train or experiment. It could be a hardware simulator, a battle lab, or even the actual equipment, configured for simulated input. Then you need to stimulate that environment with realistic events for the trainee to respond to. The stimulation usually comes from a scenario generator, also known as a threat generator. A scenario generator typically simulates the opposing force entities and complementary friendly force entities that the trainees need to interact with.

Trends

A scenario generator should allow training staff to quickly and easily design and develop scenarios that place trainees in a realistic situation. The application should use the proper terminology and concepts for the trainees’ knowledge domain. It should be flexible enough to handle the entire spectrum of simulation needs. The entities simulated by the scenario generator should operate with enough autonomy that, once the simulation starts, they do not need constant attention from an instructor or operator, but can still be managed dynamically when necessary.

In addition to its basic capabilities, a scenario generator needs to be able to communicate with the simulator and other exercise participants using standard simulation protocols. It needs to be able to load the terrain databases and entity models that you need without forcing you to use some narrowly defined or proprietary set of formats. Finally, a scenario generator needs to work well with the visualization and data-logging tools that are often used in simulation settings.

How does MAK software fit within the Scenario/Threat generator?

MAK will work with you so that you have the Scenario Generator that you need - a powerful and flexible simulation component for generating and executing battlefield scenarios. MAK will work with you to customize it to meet the particular needs of your simulation domain. For example, higher fidelity models for a particular platform can be added, new tasks can be implemented, or the graphical user interface can be customized to create the correct level of realism. Features include:

- Scenario development – Staff can rapidly create and modify scenarios. Entities can be controlled directly as the scenario runs. Core technology: VR-Forces.

- Situational Awareness – The visualization system includes a 2D tactical map display, a realistic 3D view, and an eXaggerated Reality (XR) 3D view. All views support scenario creation and mission planning. The 3D view provides situational awareness and an immersive experience. The 2D and XR views provide the big-picture battlefield-level view and allow the instructor to monitor overall performance during the exercise. To further the instructor’s understanding of the exercise, the displays include tactical graphics such as points and roads, entity effects such as trajectory histories and attacker-target lines, and entity details such as name, heading, and speed. Core technology: VR-Vantage.

- Network modeling – The lab can simulate communications networks and the difficulties of real-world communications. Core technologies: Qualnet eXata and AGI SMART.

- Correlated Terrain – MAK’s approach to terrain, terrain agility, ensures that you can use the terrain formats you need when you need them. We can also help you develop custom terrains and can integrate them with correlated terrain solutions to ensure interoperability with other exercise participants. Core technologies: VR-TheWorld Server, VR-inTerra.

- Sensor modeling – The visualization component can model visuals through different sensor spectrums, such as infrared and night vision. Core technology: JRM SensorFX.

- Open Standards Compliance – VR-Forces supports the High Level Architecture (HLA) and Distributed Interactive Simulation (DIS) protocols.
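To give a rough sense of what DIS traffic looks like on the wire, the Python sketch below packs and unpacks the 12-byte PDU header as laid out in IEEE 1278.1 (protocol version 6 framing). It is not VR-Link or VR-Forces code. The function names are hypothetical and the field values are arbitrary examples.

```python
import struct

# DIS PDU header, big-endian: protocol version, exercise ID, PDU type,
# protocol family (1 byte each), timestamp (4 bytes), PDU length (2 bytes),
# padding (2 bytes). Total: 12 bytes.
HEADER = struct.Struct(">BBBBIHH")

ENTITY_STATE_PDU = 1      # PDU type enumeration for Entity State
ENTITY_INFO_FAMILY = 1    # protocol family: Entity Information/Interaction


def pack_header(exercise_id, pdu_type, family, timestamp, length, version=6):
    """Hypothetical helper: build the 12-byte header for one PDU."""
    return HEADER.pack(version, exercise_id, pdu_type, family,
                       timestamp, length, 0)


def unpack_header(data):
    """Hypothetical helper: decode the header fields from raw bytes."""
    version, exercise_id, pdu_type, family, timestamp, length, _pad = \
        HEADER.unpack_from(data)
    return {
        "version": version,
        "exercise_id": exercise_id,
        "pdu_type": pdu_type,
        "family": family,
        "timestamp": timestamp,
        "length": length,
    }


# 144 bytes is the size of an Entity State PDU with no articulation parameters.
header = pack_header(exercise_id=1, pdu_type=ENTITY_STATE_PDU,
                     family=ENTITY_INFO_FAMILY, timestamp=0, length=144)
fields = unpack_header(header)
print(len(header), fields["pdu_type"], fields["length"])
```

Because every DIS participant reads this fixed header, a receiver can filter by exercise ID and PDU type before decoding the rest of the packet.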

Sensor Operator Station

Using the new Sensor Operator capability, a VR-Engage user can perform common surveillance and reconnaissance tasks such as tracking fixed and moving targets - using a simulated E/O camera or IR sensor, with configurable informational overlays. Immediately control the gimbaled sensor using joysticks or gamepads; or configure VR-Engage to work with sensor-specific hand controller devices. VR-Engage has built-in support for HLA/DIS radios, allowing sensor operators to communicate with pilots, ground personnel, or other trainees or role players using standard headsets.

VR-Engage's new Sensor Operator capability can fit into your larger simulation environment in a number of different ways to help meet a variety of training and experimentation requirements:

- Attach a gimbaled sensor to any DIS or HLA entity, such as a UAV, ship, or manned aircraft - even if the entity itself is simulated by an existing 3rd party application.

- When VR-Engage is used in conjunction with VR-Forces CGF, a role player can take manual control of a camera or sensor that has been configured on a VR-Forces entity.

- For a full UAV Ground Control Station, use VR-Forces GUI to "pilot" the aircraft by assigning waypoints, routes, and missions; while using VR-Engage's Sensor Operator capability to control and view the sensor on a second screen.

- Execute a multi-crew aircraft simulation using two copies of VR-Engage - one for the pilot to fly the aircraft using a standard HOTAS device or gamepad; and a second for the Sensor Operator.

- Place fixed or user-controllable remote cameras directly onto the terrain, and stream the resulting simulated video into real security applications or command and control systems using open standards like H.264 or MPEG4.

VR-Engage comes with MAK's built-in CameraFX module which allows you to control blur, noise, gain, color, and many other camera or sensor post-processing effects. The optional SensorFX add-on can be used to increase the fidelity of an IR scene - SensorFX models the physics of light and its response to various materials and the environment, as well as the dynamic thermal response of engines, wheels, smokestacks, and more.

Virtual Simulators

Where do Virtual Simulators fit within the system architecture?

Virtual simulators are used for many different roles within Training, Experimentation, and R&D systems.

- Trainees – The primary interface for training vehicle and weapons operations, techniques, tactics, and procedures, is often a Virtual Simulator. Pilots use flight simulators, ground forces use driving and gunnery trainers, soldiers use First Person Shooter simulators, etc.

- Test Subjects – Virtual Simulators are used to test and evaluate virtual prototypes, to study system designs, or to analyze the behavior of operators.

- In either case, the Virtual Simulator is connected to other simulators, instructor operator stations, and analysis tools using a distributed simulation network. Collectively these systems present a rich synthetic environment for the benefit of the trainee or researcher.

- Role Players – Scenario Generators, Computer Generated Forces, Threat Generators, or any other form of artificial intelligence (AI) can add entities to bring the simulated environment to life. But, as good as AI can be, some exercises need real people to control supporting entities to make the training or analysis accurate. In these cases, Virtual Simulators can be used to add entities to the scenarios.

The fidelity of Virtual Simulators can vary widely to support the objectives within available budgets. The Tech Savvy Guide goes into great detail about the fidelity of Virtual Simulators.

How does MAK software fit within a Virtual Simulator?

- Multi-role Virtual Simulator – MAK's VR-Engage is a great place to start. We've done the work of integrating high-fidelity vehicle physics, sensors, weapons, first-person controls, and high-performance game-quality graphics. VR-Engage lets users play the role of a first-person human character; a vehicle driver, gunner, or commander; or the pilot of an airplane or helicopter. Use it as is, or customize it to the specifications of your training or experimentation. As with VR-Vantage and VR-Forces, VR-Engage is terrain agile so you can use the terrain you have or take advantage of innovative streaming and procedural terrain techniques.

- VR-Engage can run standalone, without requiring any other MAK products, and is fully interoperable with 3rd party CGFs and other simulators through open standards. But many additional benefits apply when VR-Engage is used together with MAK’s VR-Forces: you can immediately share and reuse existing terrain, models, configurations, and other content across VR-Forces, VR-Vantage, and VR-Engage with full correlation; author and manage scenarios in a unified way; and switch between player control and AI control of an entity at run time.

- Visual System – If you have your own vehicle simulation and need immersive 3D graphics, then VR-Vantage is the tool of choice for high-performance visual scenes for out-the-window visuals, sensor channels, and simulated UAS video feeds. VR-Vantage can be controlled through the Computer Image Generator Interface (CIGI) standard as well as the High Level Architecture (HLA) and Distributed Interactive Simulation (DIS) protocols.

- SAR Simulation – If your simulator is all set, but you need to simulate and send Synthetic Aperture Radar (SAR) images to the cockpit, then RadarFX SAR Server can generate realistic SAR and ISAR scenes and send them over the network for you to integrate into your cockpit displays.

- Network Interoperability – Developers who build the Virtual Simulator from scratch can take advantage of VR-Link and the MAK RTI to connect the simulation to the network for interoperation with other simulation applications using the High Level Architecture (HLA) and Distributed Interactive Simulation (DIS) protocols.

Image Generators

Where does an Image Generator fit within the system architecture?

Image generators provide visual scenes of the simulation environment from the perspective of the participants. These can be displayed on hardware as simple as a desktop monitor, or as complex as a multiple-projector dome display. The scenes can be rendered in the visible spectrum for "out-the-window" views or in other wavelengths to simulate optical sensors. In any case, the image generator must generate scenes very quickly to maintain a realistic sense of motion.

How does MAK software fit within an Image Generator?

VR-Vantage is the core of MAK's Image Generation solution. It uses best-of-breed technologies to custom-tailor high-quality visual scenes of the synthetic environment for your specific simulation needs. VR-Vantage IG can be used as a standalone image generator, connected to a host simulation via the CIGI, DIS, or HLA protocols. VR-Vantage is also used as the rendering engine for all of MAK's graphics applications, including VR-Engage (multi-role virtual simulator), VR-Forces (computer-generated forces), and VR-Vantage (battlefield visualization).