Welcome to the MAK Blog!

Stay up to date on the latest news, get the freshest tech tips, and meet our team through MAKer spotlights!

Better Together with AVS and MAK: From LS Core 2.0 to Avalon Airshow and Beyond!

This is a guest article written by MAK’s friend and partner, Paul Rogers, former Australian Army Colonel, from Applied Virtual Simulations (AVS). The LS Core program mentioned below is another great example of MAK ONE being used as a common synthetic environment for collective training, similar to the UK RAF’s Gladiator program.

Imagine training for the battlefield anywhere, anytime, without limitations. Land Simulation Core 2.0 provides the Australian Army with a single simulation platform to prepare soldiers for the real-world challenges of modern warfare.

From Oxfordshire to Oslo: Connecting the MAK Ecosystem Across Europe at MAK ONE User Groups, hosted by ST Engineering Antycip

by Steve Peart, International Sales

Last week, our sister company and exclusive European distributor, ST Engineering Antycip, hosted two MAK ONE User Group sessions, bringing together simulation professionals from across Europe: one in Oxfordshire, England, and the other in Oslo, Norway. The conferences welcomed systems integrators, research developers, and industry experts, all eager to connect, share insights, and explore the latest in modeling and simulation training solutions. I was honored to attend such productive events that fostered collaboration and knowledge-sharing among our community of MAK ONE software users in the UK and Nordic areas!

S2RC Joins the MAK Ecosystem to Drive Innovation in Simulation and Training

MAK and Stone Solutions + Research Collective (S2RC) have joined forces to push the boundaries of simulation and training. S2RC’s expertise in emerging and disruptive human systems technologies is a perfect match for MAK’s commitment to developing simulation applications and systems. This partnership integrates research, engineering, and training expertise to develop simulation systems that adapt to evolving end-user requirements.

The final installment of MAK's Simulation Solutions Superheroes

A few months ago, we introduced round one and round two of our Simulation Solutions Division superheroes. We're back with the final installment of our heroes!

Creativity flows, beauty abounds, and gears are always turning – we’re excited for you to meet more of MAK’s team of Simulation Solutions Superheroes, a formidable force in the realm of simulation technology and solutions.

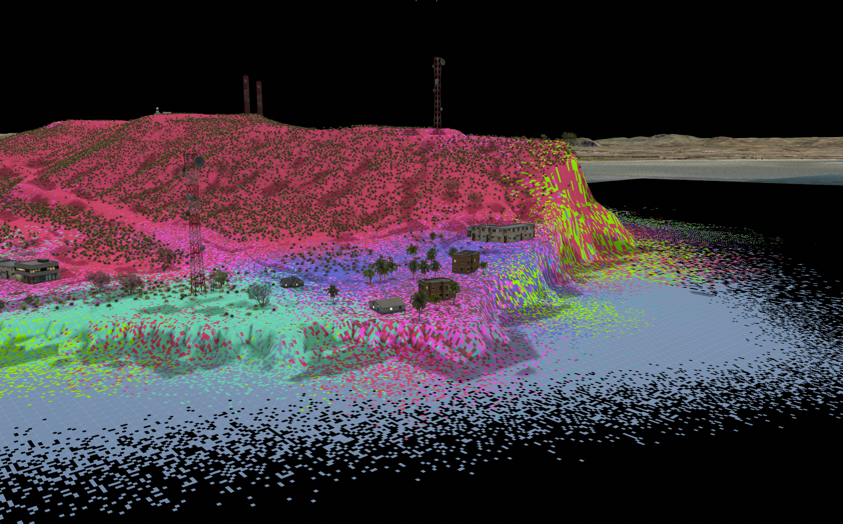

Did you know? MAK supports CDB terrain.

by Jim Kogler, VP of Products

CDB (Common Database) is a terrain standard that uses highly structured and curated GIS data to define the terrain for Synthetic Environments. If you’ve ever worked with raw source data (GIS data), you know it can be all over the place: each source may use slightly different formats and inconsistent attributes, which can make it a headache to manage. CDB solves this problem with a CDB terrain generation tool that processes raw data into a highly structured format, ensuring everything is well-defined and consistent. The tool also allows you to clean up problematic data and integrate your own models into a well-organized directory structure (the database).

Getting the Most Out of MAK Customer Support (We’re Your “Engineer Down the Hall”!)

by Deb Fullford, VP Sales and Business Development

MAK has been competing successfully with government-owned and internally developed solutions for over 30 years. This is partly due to MAK’s open APIs and MOSA approach, which make MAK ONE an ideal synthetic environment platform that can be customized to meet end users’ needs. The other reason for MAK’s continued success is our excellent support. MAK is known for continually going above and beyond to help our customers succeed. In the words of Dan Brockway, MAK’s longtime VP of Marketing: “MAK – Your engineer down the hall.”

Synthetic Aperture Radar (SAR) Imaging in the MAK ONE Environment

by Len Granowetter, CTO

It’s hard to believe that it was only at I/ITSEC 2023 that I first met the folks from ISL – radar simulation experts that I’ve worked with so closely ever since. Now, only fourteen months later, I’m so proud of the new addition to the MAK ONE ecosystem that our companies have built together: RFView for MAK ONE.

VR-Forces: An Integral Part of the UK Army FORGE Program

by Deb Fullford, VP Sales & Business Development

For the past year, MAK, along with our sister company ST Engineering Antycip, has supported Cervus on the UK Army’s FORGE program. FORGE, a 4-year program, integrates Cervus’ AI Analytics Engine with MAK’s VR-Forces Constructive Simulation to create an AI-based, data-driven simulation environment that supports experimentation for the UK Army’s Fifth Generation Force.

Experience a Beautiful World with VR-Vantage, in Color or Black & White

by Steve Peart, Sr. International Channel Manager

If you’ve been following our recent blog posts, you know we’re gearing up for some exciting showcases at I/ITSEC this year! Our team is bringing a wealth of great demos to the floor, but one of the highlights I’m most excited about showing is our latest release of VR-Vantage (3.1.1).

Breaking Barriers in Training: NICO’s Leap to 5G-Enabled Augmented Reality!

In collaboration with our partners at Athena-Tek, MAK's Simulation Solutions Division has achieved a major milestone by successfully testing NICO's operation over a private 5G network, demonstrating seamless remote deployment in the most demanding and harsh training environments.

Introducing RFView for MAK ONE

Real-time Ray Tracing Technology to Generate Accurate Radar Images of MAK ONE Simulation Environments

Synthetic Aperture Radar (SAR) imaging has become an indispensable tool for modern militaries. This creates a critical requirement for modeling and simulation engines and platforms to generate simulated SAR images that are both physically accurate and fully correlated with out-the-window views and EO/IR images of your virtual world.

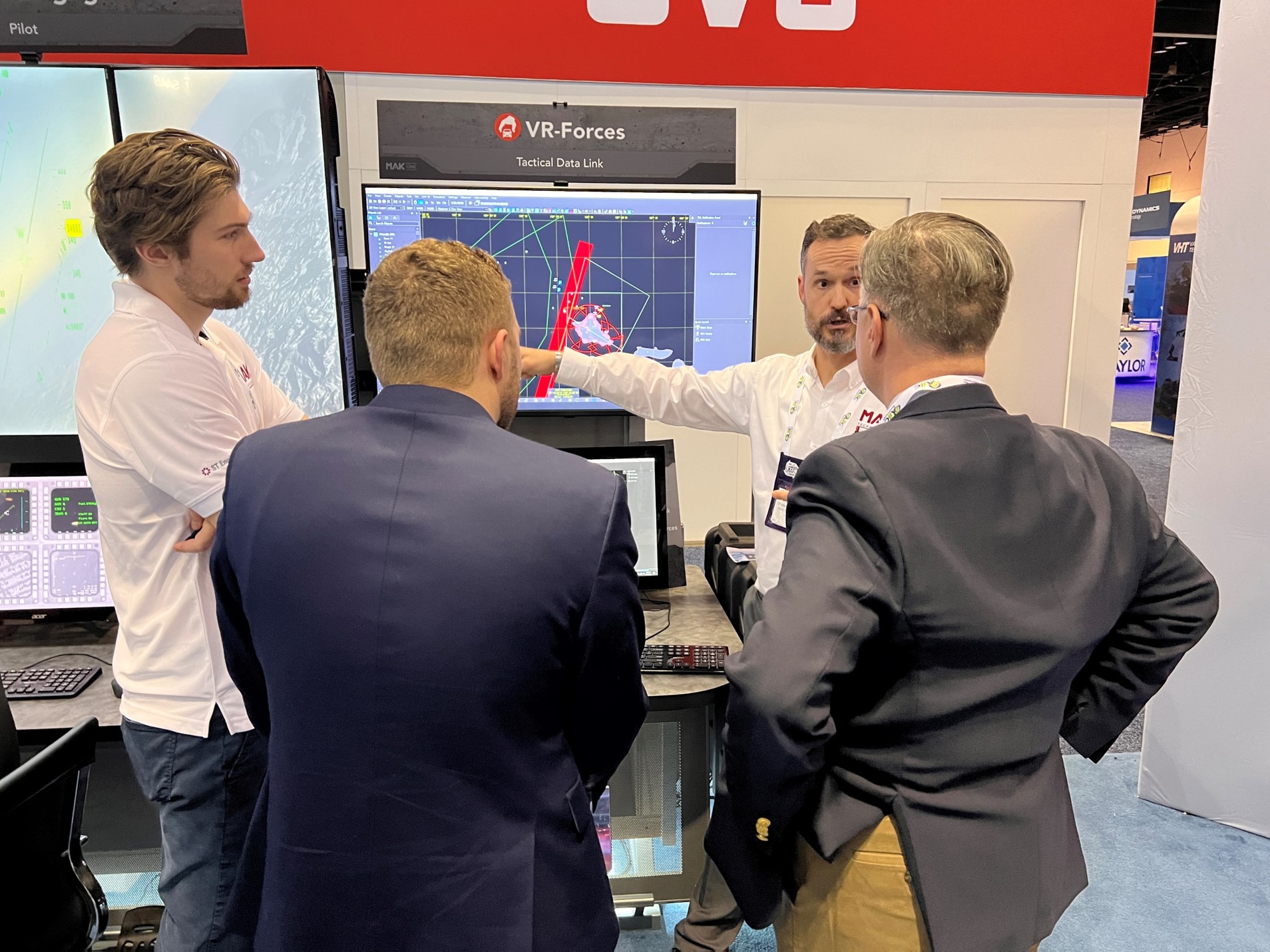

Feast Your Eyes on the Future of Training & Simulation at MAK Booth #1213

MAK has been an I/ITSEC staple for the past 30 years, and this year we're serving up a satisfying blend of our newest innovations alongside our trusted MAK ONE platform.

What's on the menu at MAK booth #1213, you ask?

Continuing the introduction of our Simulation Solutions Superheroes

A few months ago, we introduced the superheroes of our Simulation Solutions Division. (If you missed our first batch of intros, catch those here!) Now we're back with round two so you can meet the next set of our Simulation Solutions superheroes.

Creativity flows, beauty abounds, and gears are always turning – we’re excited for you to meet more of MAK’s team of Simulation Solutions Superheroes, a formidable force in the realm of simulation technology and solutions.

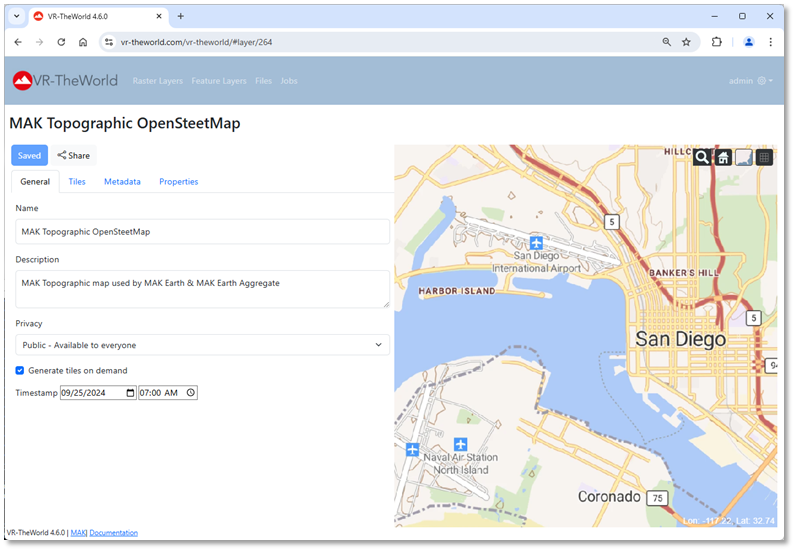

Did you hear? VR-TheWorld 4.6 has arrived! Here are the highlights...

By Brian Spaulding, MAK's Terrain Expert

Drumroll please...with November comes the latest VR-TheWorld release: version 4.6! You can see the list of all the new features in the latest Release Notes, but we'll focus on two important additions in this article:

1. An embedded tile server, OpenStreetMap data in OpenMapTiles format, and sample Mapbox GL styles that you can use in raster layers.

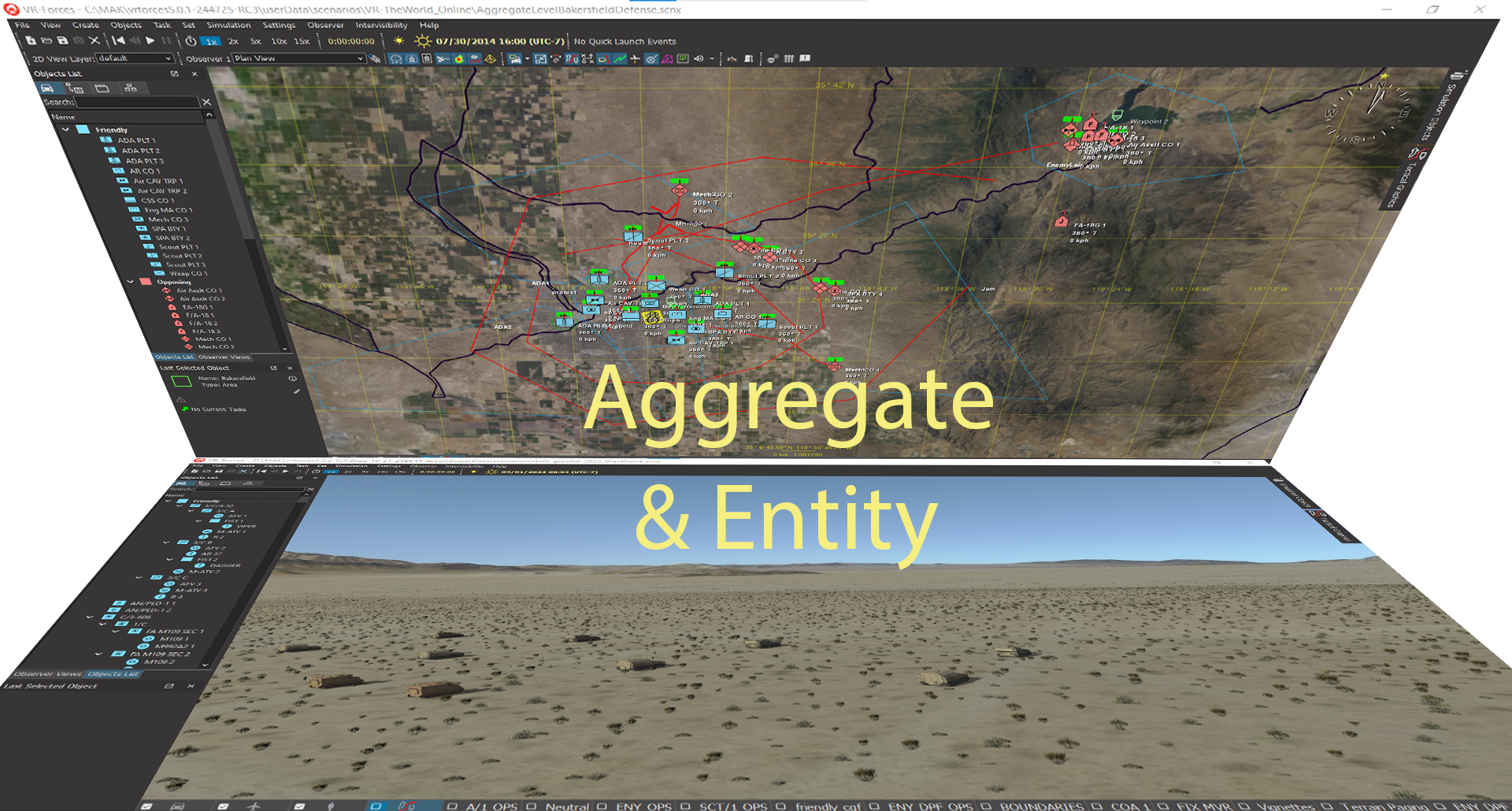

2. Global data additions to support the VR-Forces Aggregate-Level simulation scenarios.

MAK ONE as a Platform: Building a Better Simulation and Training Experience

by Ross Uhler, Director of Strategic Business Development

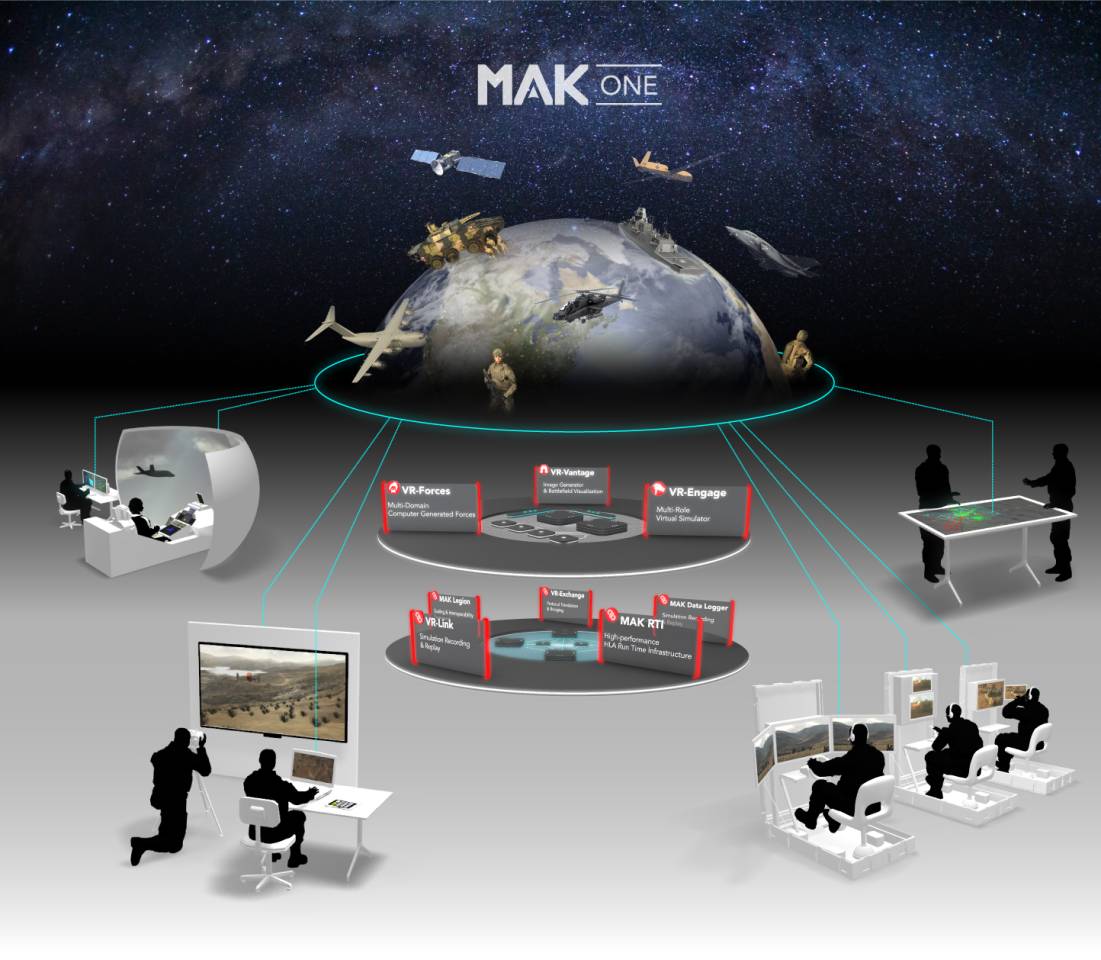

In the early 1990s, IBM introduced the forerunner to today’s smartphone, the IBM Simon. Around the same time, John Morrison and Warren Katz founded MAK Technologies to provide simulation, visualization, and networking solutions for the emerging distributed simulation community. Just as smartphones have evolved over the years, the MAK ONE platform has advanced to deliver a Modular Open System Architecture (MOSA) synthetic environment that provides a tactically accurate, adaptable simulation environment with the flexibility to incorporate new functionality.

Simulation and Reality: Revisiting I/ITSEC with Newfound Expertise and Mild Trepidation

by Tim Collins, North American Sales

Every year at the tail end of November and very beginning of December, just as most of the country begins its consumerist pilgrimage toward the nearest mall, a very different migration happens in the world of training and simulation. This migration takes place not to crowded sales racks or the fluorescent-lit aisles of Target or Best Buy, but to the many hotels that line either side of International Boulevard and eventually, to the sprawling exhibition halls of the West Concourse within the Orange County Convention Center, home of I/ITSEC.

Adding Non-kinetic Models to VR-Forces

by Pete Swan, Director of International Business

It was my great privilege and pleasure to present at the recent NATO CA2X2 Forum in Rome.

My presentation was about a proof of concept that MAK’s Principal Engineer, Dr. Doug Reece, recently conducted to investigate whether VR-Forces could be used to create non-kinetic models and integrate them into a kinetic simulation environment.

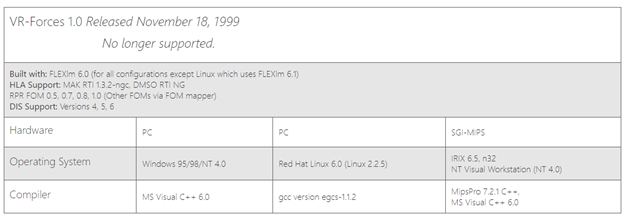

The Story of VR-Forces

by Deb Fullford, VP Sales

Back in 1999 when the world was busy worrying about Y2K, John Morrison, one of MAK’s co-founders, was worrying about how to design a flexible computer-generated forces application that could accurately simulate platforms and be easily extended to incorporate new functionality...

MAK Awarded for Excellence in M&S at BlueTIDE 2024

By Tim Collins

It was an honor to represent MAK alongside Stephen Landry at #BlueTIDE2024 hosted by 401 Tech Bridge last week, and I’m thrilled to share that our work was recognized with the award for Excellence in the Digital Twin Modeling and Simulation category.

What's Up MAK Newsletter: Simulation Solutions Edition

This edition of our What's Up MAK newsletter focuses on our Simulation Solutions team, based in Orlando. You'll learn all about the team's collective and individual superpowers, our new strategic partnership agreement with HTX Labs, our progress on completing Phases 1 and 2 of the Marine Corps ITE Program, and how MAK FIRES is igniting excitement across the U.S.

Enjoy!

The Simulation Solutions Division: A League of Extraordinary Heroes Dedicated to Customer Success

The Simulation Solutions Division at MAK Technologies harnesses a unique set of superpowers, making them the perfect ally for simulation and training projects big and small. (Watch out, Justice League! There’s a new superhero team in town.)

HTX Labs and MAK Technologies Partnership Announcement

The teams at HTX Labs and MAK Technologies are excited to announce our strategic partnership to elevate military training and simulation with XR and AI solutions!

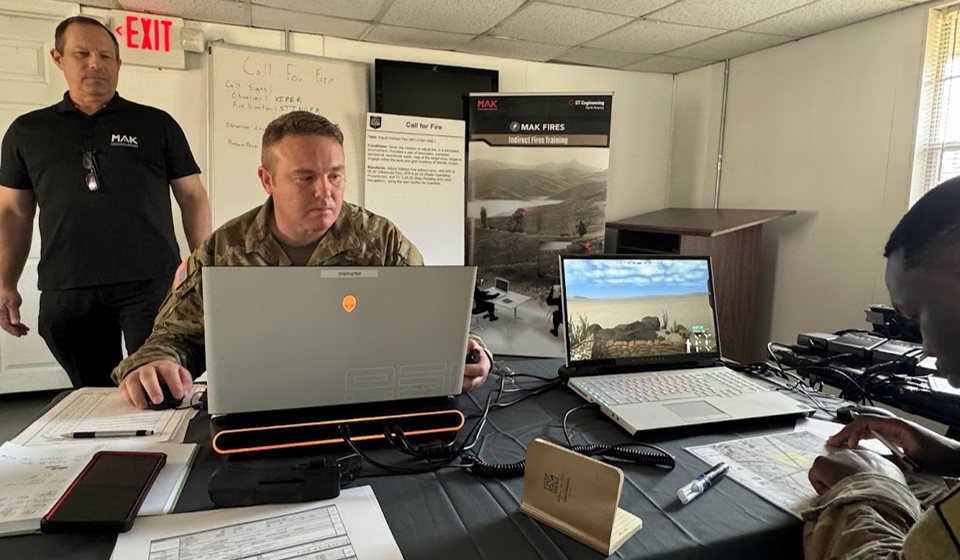

MAK FIRES Ignites Excitement across the U.S.

We're excited by the traction MAK FIRES, our portable and realistic Forward Observer Training System, is gaining nationwide. Here are some recent successes and upcoming plans to showcase MAK FIRES...

Dive Deep into Terrain with Brian Spaulding

We just hosted a technical webinar about terrain in our "Get Deep with MAK" technical webinar series. In this session, MAK’s terrain expert, Brian Spaulding, explores VR-TheWorld and demonstrates how to augment a Whole World terrain with commonly available GIS data. Specifically, he walks through two topics:

What's Up MAK Newsletter: Get Deep with MAK!

This edition of our What's Up MAK newsletter features our upcoming technical webinar series "Get Deep with MAK", outlines what's new in VR-TheWorld, explores a bit of show and tell with some newly released MAK videos, and shows how sensor operator training can be done in MAK ONE! Enjoy!

Five things to know about VR-TheWorld 4.3...

To celebrate the latest release of VR-TheWorld, here are five things everyone should know:

What's Up MAK Newsletter: What's New in MAK ONE 2024?

This edition of our What's Up MAK newsletter is all about our MAK ONE 2024 Product Release — we'll kick things off with a fireside chat with Jim Kogler, VP of Products. And keep scrolling to find more release-focused resources, events, and news, as well as MAK ONE customer stories, industry news, and open jobs at MAK. Enjoy!

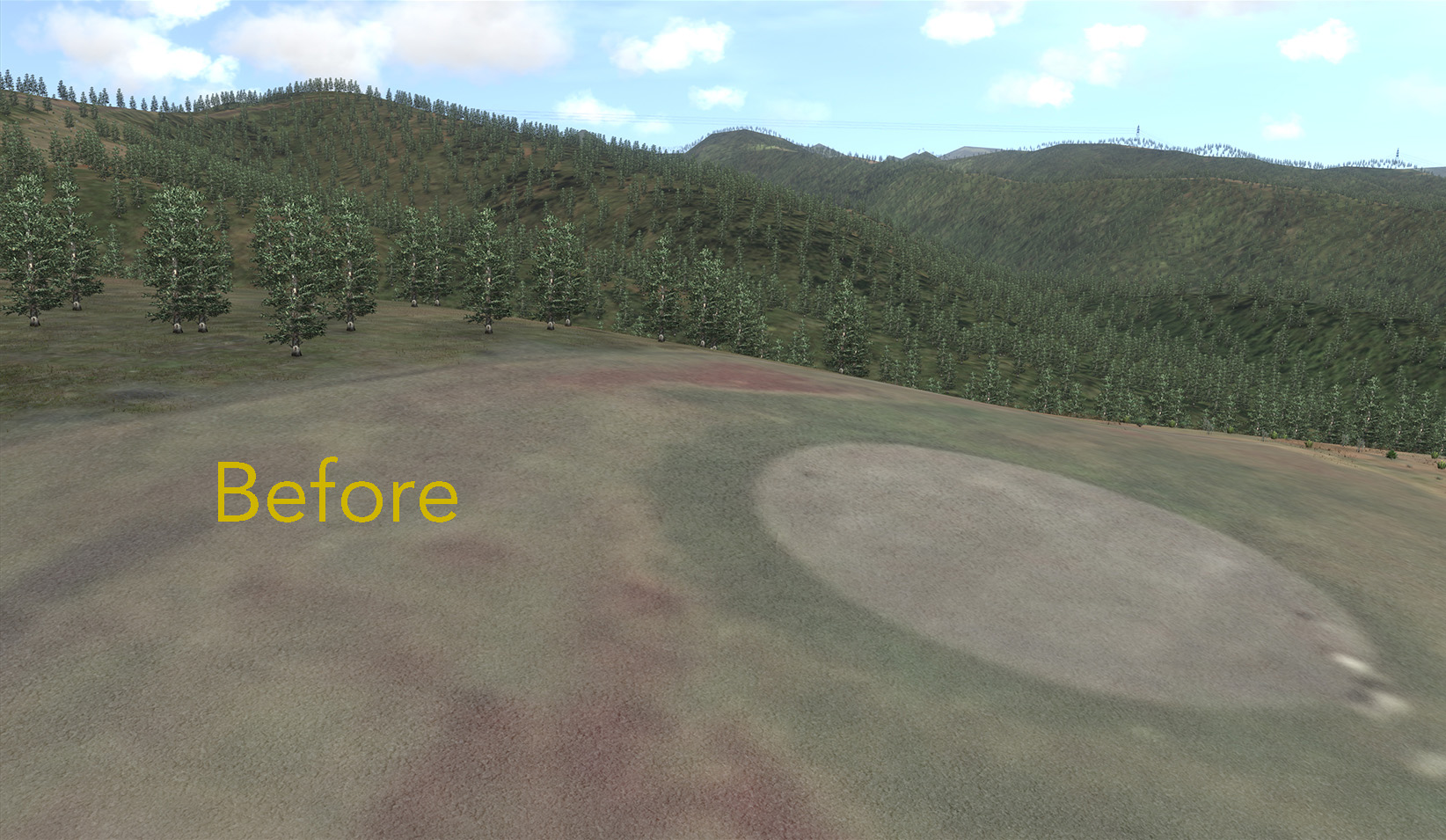

Vegetation in the MAK Earth Terrain Engine!

Why Generative AI is worse than Procedural Generation — because sometimes you don’t want machines to “think”, you want them to do what they’re told.

This is the story of MAK ONE’s ability to generate deterministic terrain vegetation over the entire planet.

(Virtual) Fireside Chat with Jim Kogler about MAK ONE

Spring is just around the corner, but it’s always the right season for a [virtual] fireside chat with Jim about what’s new in this release of MAK ONE.

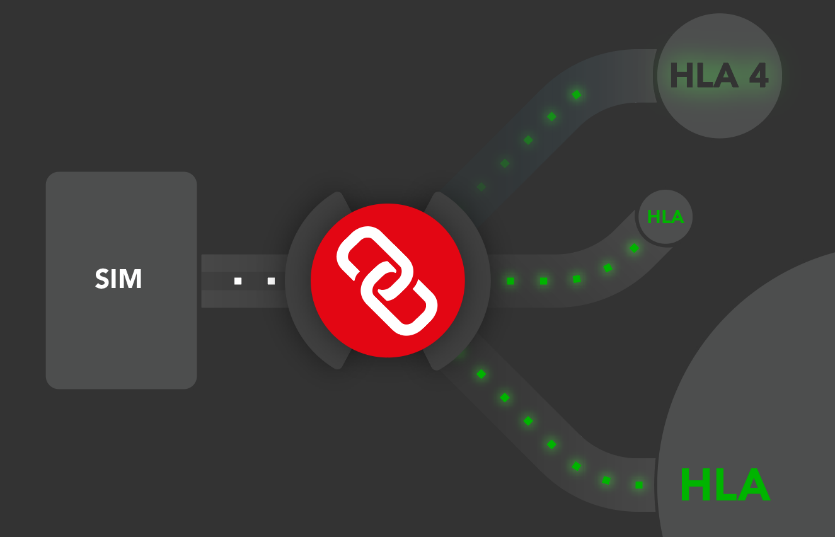

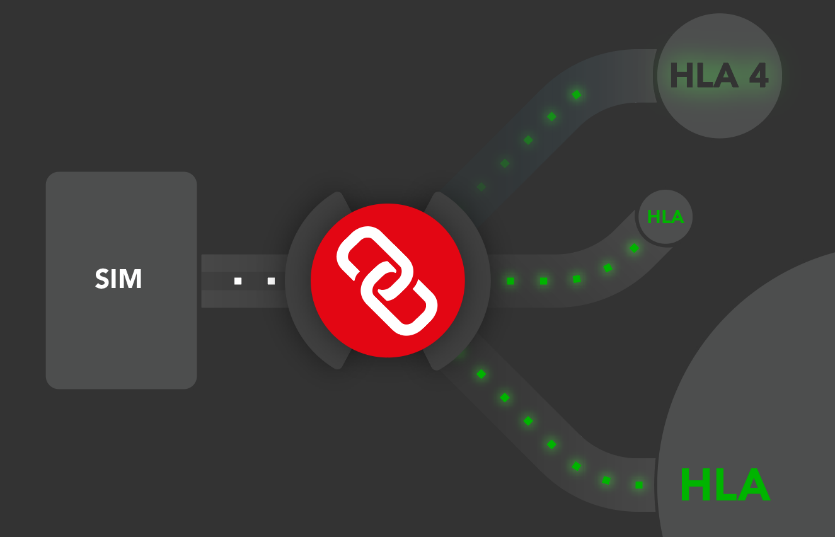

Here's what's new in HLA 4

As we highlighted last year, HLA 4 has passed the first round of IEEE balloting. MAK is proud to have worked diligently alongside the interoperability community to progress the open standards process to reach this milestone.

Keep reading to learn what's new in HLA 4...

NETN FOM Support in MAK ONE

by Jim Kogler

Starting with the MAK ONE 2024 release, the MAK ONE suite will use the NETN (or NATO) FOM for all default HLA-based connections. The NETN (NATO Education and Training Network) FOM is the NATO distributed simulation FOM; it was developed by the NATO Modeling and Simulation Group (NMSG) and is available online at amsp-04.github.io.

What's Up MAK Newsletter: Happy Holidays from MAK!

Whether you're spending the holidays in a snowy mountain chalet or keeping things cozy at home, we wish you and yours a holiday season filled with joy and togetherness. May the coming year bring prosperity, success, and continued collaboration as we embark on new and continued ventures together!

Enjoy this final newsletter of 2023. And spoiler alert, there's a gift for you inside...Check it out!

Here's to a bright year ahead,

Your friends at MAK

NTSA Modeling and Simulation Awards: Gladiator Delivery Team wins Training Systems Acquisition Award!

Congratulations to the entire Gladiator Delivery Team for winning NTSA’s Training Systems Acquisition Award!

The Gladiator Program represents a paradigm shift in delivering collective synthetic training environments. The UK RAF, with the help of prime contractor Boeing Defence UK and subcontractor ST Engineering Antycip, brought together commercial companies to work alongside a professional White Force to develop a COTS-based Collective MDO Training Environment.

MAK Technologies: Where Experience Meets Innovation

You know we’ve got the best software engineers in the business. But did you know that in addition to amazing coders, we’ve also got a team full of 3D artists, military veteran SMEs from every branch, and top simulation industry professionals? With the MAK team’s combined experience, we've...

Hot off the press: The Q3 What's Up MAK Newsletter

Topics include:

- Standing the Test of Time with MAK ONE

- VR-Engage Product Announcement and Tech Tip

- Lunch with MAK Is Back!

- MAK Technologies: Where Experience Meets Innovation

- MAK Around the World

- ICYMI: In Case You Missed It – MAK success stories and news

Open Letter to Presagis Users

By Dan Brockway

Many of you have probably heard about the absorption of Presagis into CAE, and the discontinuation of Presagis products.

If you are a customer of Presagis products and you are looking for an alternative solution, we at MAK Technologies stand ready to help. Our product suite is perhaps closer in breadth and scope to the Presagis suite than that of any other vendor on the market. Our companies grew up together and have shared many common distributors, resellers, and local support partners over the years. We have also shared many of the same philosophies toward building flexible products with APIs for user extension and customization.

Is your modeling and simulation technology at end-of-life?

Now's the time to look at MAK ONE. MAK ONE is a modular architecture that can be your whole synthetic environment or fill the gaps in your system architecture.

How to Configure MAK ONE’s VR-Engage Virtual Simulator with Accurate Vehicle Dynamics based on an Existing Model

By Len Granowetter

MAK’s VR-Engage virtual simulator application, part of the MAK ONE suite, includes a built-in vehicle dynamics simulation, using Vortex, by CM Labs. Vortex is a mature and powerful COTS physics and dynamics engine that provides a simple authoring UI, allowing users to model at whatever fidelity is desired – including modeling of multi-axle drive and steering systems, dynamic loads, wheeled and tracked systems, physics of turrets and other parts, etc. However, the MAK ONE platform is also capable of using pre-existing dynamics models and external code that is not based on the Vortex physics engine. Here's how to configure VR-Engage with accurate vehicle dynamics based on an existing model.

VR-Engage 2.0.3 has arrived! Here is what's new.

By Len Granowetter

We just announced our mid-2023 set of maintenance releases for the MAK ONE applications: VR-Forces, VR-Vantage, and VR-Engage. For the most part, maintenance releases are about bug fixes, optimizations, and other minor configuration changes that make our customers’ experience better. But sometimes, maintenance releases also include targeted new capabilities that are additive or low-impact; that’s the case with the VR-Engage 2.0.3 release.

The Q2 2023 What's Up MAK Newsletter is hot off the press!

Topics include:

- MAK's upcoming charity event: Join us at our Dogs fore! Vets Topgolf Orlando Charity event on June 22 from 6-9pm

- A customer success! The Naval Postgraduate School’s (NPS) MOVES Institute Relies on MAK ONE to Help Prepare Students for Simulation Success

- A brand new product video: VR-Engage, the MAK ONE Multi-Domain Multi-role Virtual Simulator

- MAK in the wild: Len Granowetter's debut on the Warfighter Podcast, "What's all the fuss about platforms?"

- AI: friend or foe? An Experiment with ChatGPT Customer Support by Jim Kogler

- MAK news:

  - HLA 4 Passes the First Round of IEEE Balloting

  - MAK Earth - An Update on Bing Maps

  - Addressing Issues Caused by NVIDIA Driver Bugs

- Tech Tips:

  - Applying Shader Effects to Map Layers

  - Squashing Bugs

An experiment with ChatGPT-Powered Customer Support

By Jim Kogler (edited by ChatGPT, Morgan, and Dan)

Multiple customers have approached me with the idea of developing a support system by training an OpenAI ChatGPT model on our MAK documentation set. They believed it could potentially provide some level of autonomous help desk for our customers. Intrigued by the possibility of automating support, I decided to give it a try. However, I must say it hasn't gone well, and as a result, we won't be releasing it just yet.

Learn how AI can sometimes be misleading...

Open Standards Milestone: HLA 4 passes IEEE balloting

HLA 4 passed the first round of IEEE balloting! MAK is proud to have worked diligently alongside the interoperability community to progress the open standards process to reach this HLA 4 milestone. We're looking forward to the finalization of the standard and implementing it into our MAK ONE suite of simulation software.

MAK Earth – An Update on Bing Maps

By Dan E. Brockway

Earlier in my career, I worked on a simulator project where we paid $1,200,000 for 5-meter imagery covering the gaming areas, with 0.5-meter imagery over just the airports and target areas. Back then, there were no monstrously large internet mapping companies that had collected high-resolution imagery of the whole planet and made it available online. Now, your simulators can be filled with Bing Maps imagery for a fraction of that cost. You can even try it out for free.

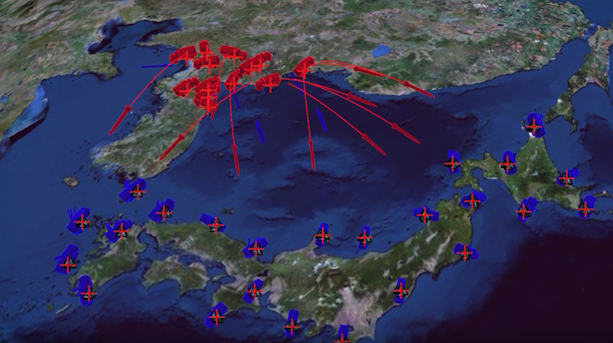

Wargaming to Build a Shared Vision of the Battle

“If commanders and staffs are to integrate or synchronize the detailed decisions and activities of the complex battlefield then they must have the same image of the battle. This image must be constructed during wargaming”, said Major John E. Frame in the Spring 1996 issue of the Army’s Field Artillery Journal, as he succinctly described the critical link between wargaming and visualization.

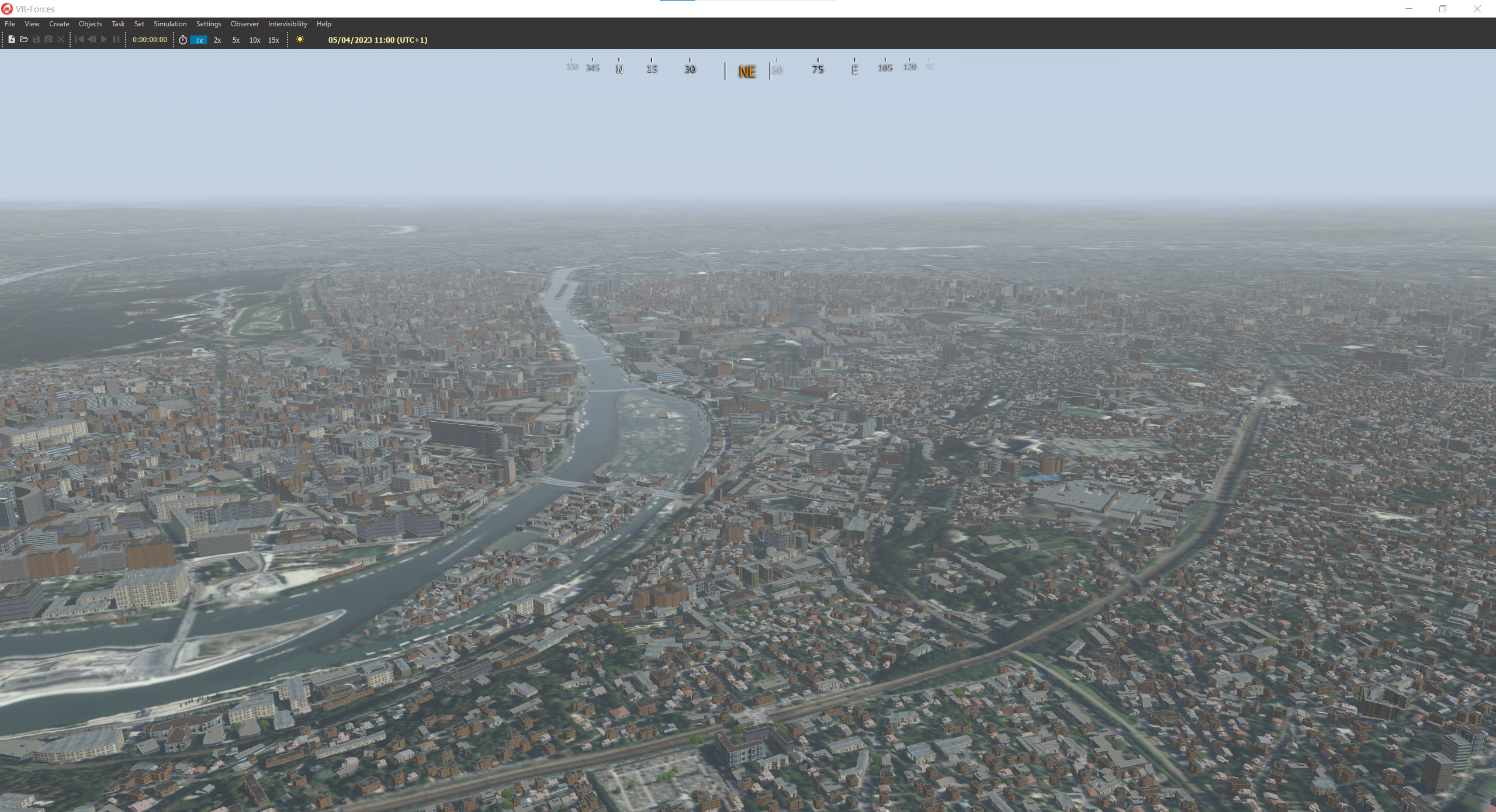

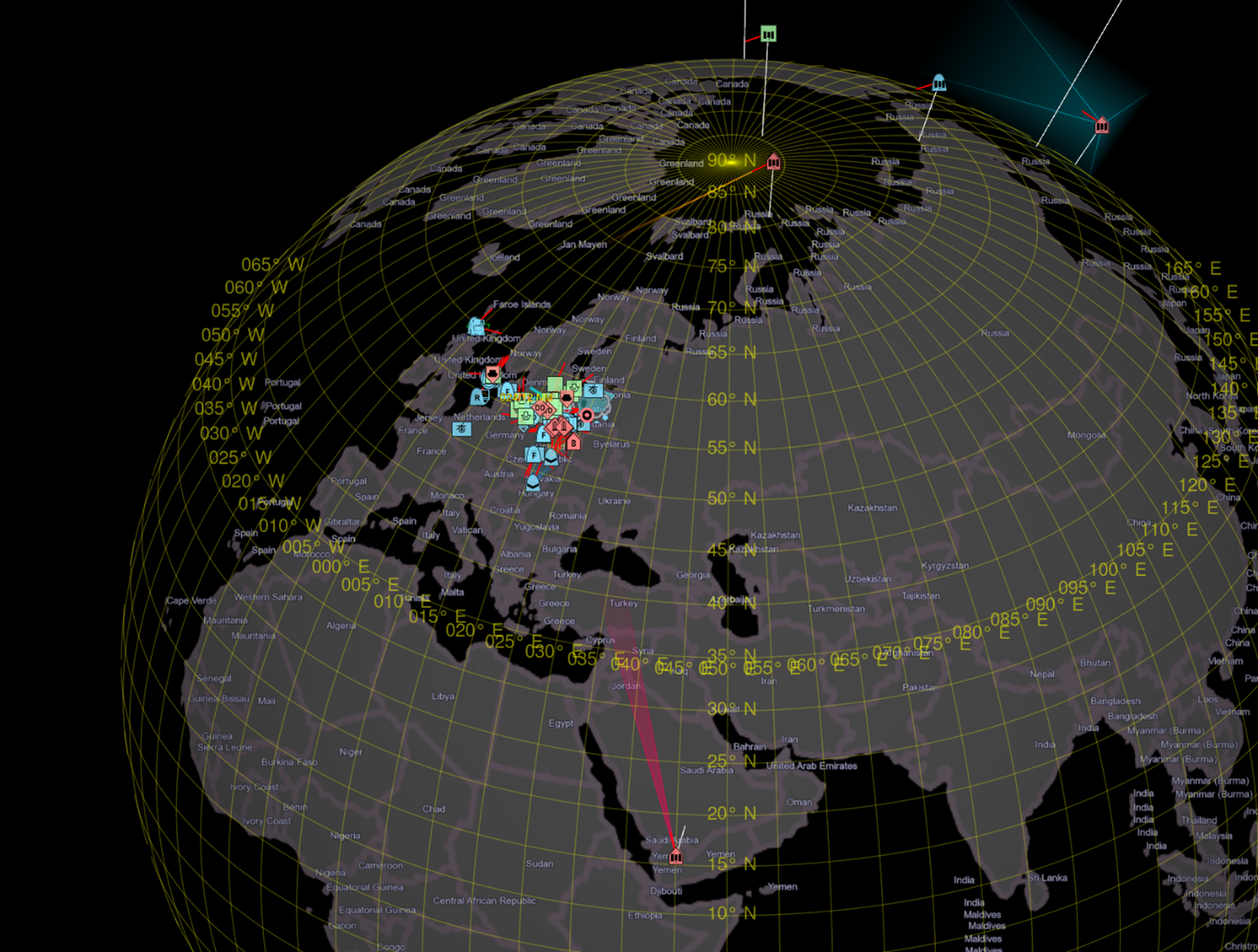

Modern simulation systems (such as MAK ONE) can model the conditions developed in the Course of Action Development phase of the Military Decision Making Process (MDMP) to bring Synthetic Environments and 3D graphics to bear as an aid in the development of a common operational picture.

Addressing Issues Caused by NVIDIA Driver Bugs

By Jim Kogler

Some of our users may have experienced crashes while using MAK ONE products with NVIDIA drivers released after November 2022. These crashes have been caused by a bug in the NVIDIA driver related to reference counting of bindless textures when using multiple graphics contexts. I’ll outline how we'll address this issue below, but first, some background.

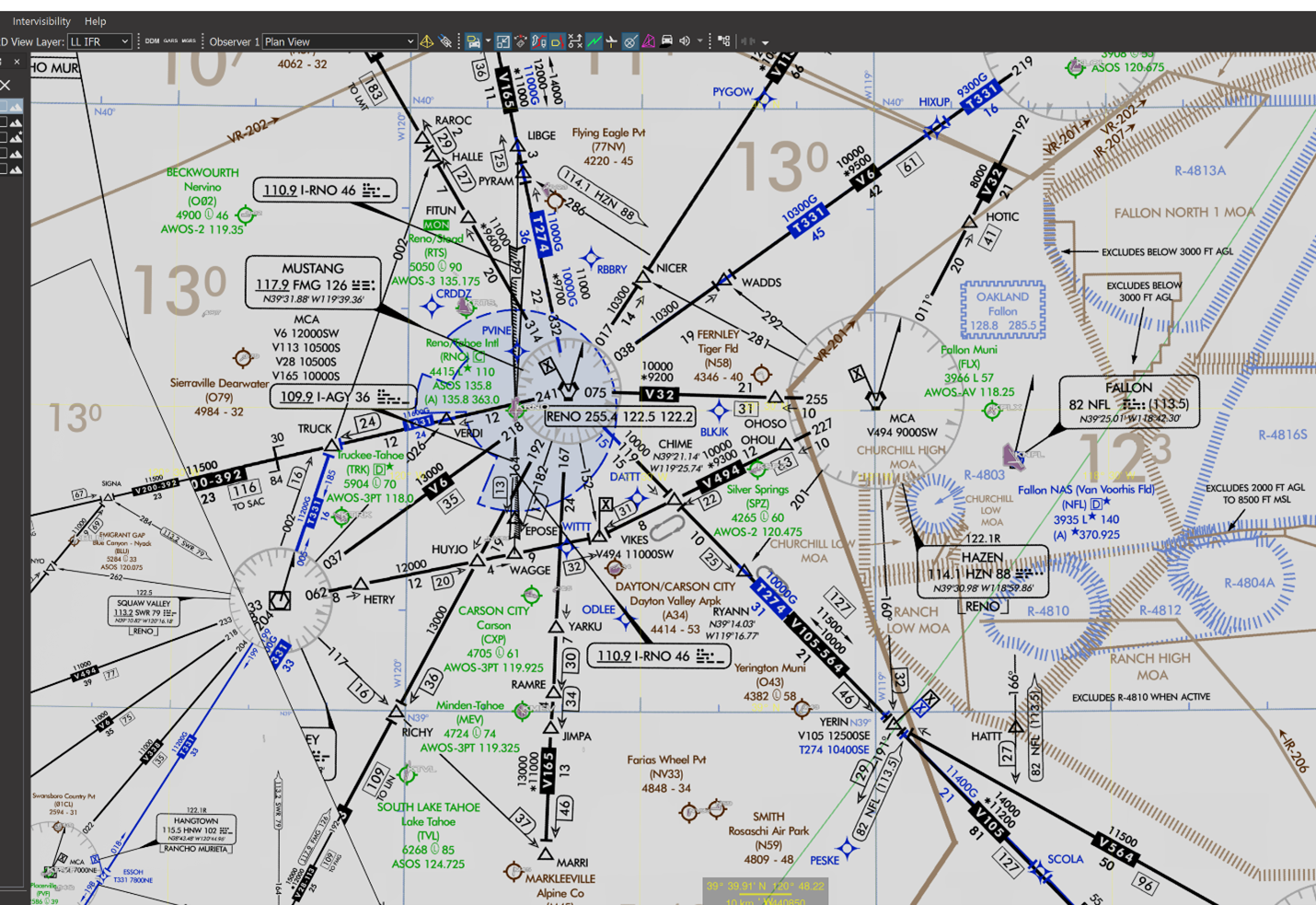

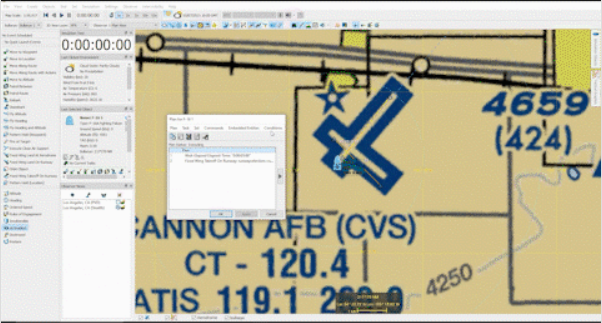

Tech Tip: Applying Shader Effects to Map Layers

by Jim Kogler

For you hotshot programmers out there, did you know you could apply a shader effect to your MAK ONE map layers?

If you have a map layer that looks like the image above (IFR Air Charts) and the color scheme makes it difficult to see your plans, you have a few options...

MAK Earth is a wonderful place. And it just keeps getting better!

The latest generation of the MAK ONE procedural terrain engine increases the biodiversity of the Synthetic Environment.


Out of this world

by Jim Kogler

Knowing where you are in a whole earth multi-domain simulation can be hard...

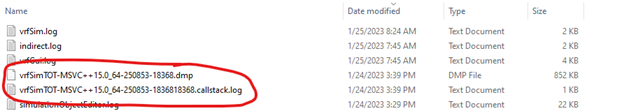

Squashing Bugs

by Jim Kogler

MAK ONE products are amazingly beautiful and complicated things. VR-Forces is built from millions of lines of code and is designed to simulate the planet. As a result, the number of things our customers can do is extensive – the sky is the limit (well, outer space is the limit)! MAK runs our products through Quality Assurance (QA), of course, but the sheer number of ways people can build a scenario and interact with a product like VR-Forces prevents us from testing absolutely everything. MAK uses a combination of automated and manual testing to try to find hidden bugs before you do. But sometimes we fail, and a crash bug makes it through testing and is found by customers.

What happens then?

What's up MAK: Letter from the CEO, Bill Cole

Friends, Customers, Partners,

Just over four years ago, I switched from using simulation software as a Soldier to leading MAK Technologies, one of the industry’s longest-running commercial providers of simulation tools and capabilities. I am continually energized by MAK’s momentum toward innovating, improving, and serving our customers!

You'll find a link to our quarterly newsletter, What's Up MAK, below, but first, here are a few key themes and trends I’ve observed during my time at MAK so far.

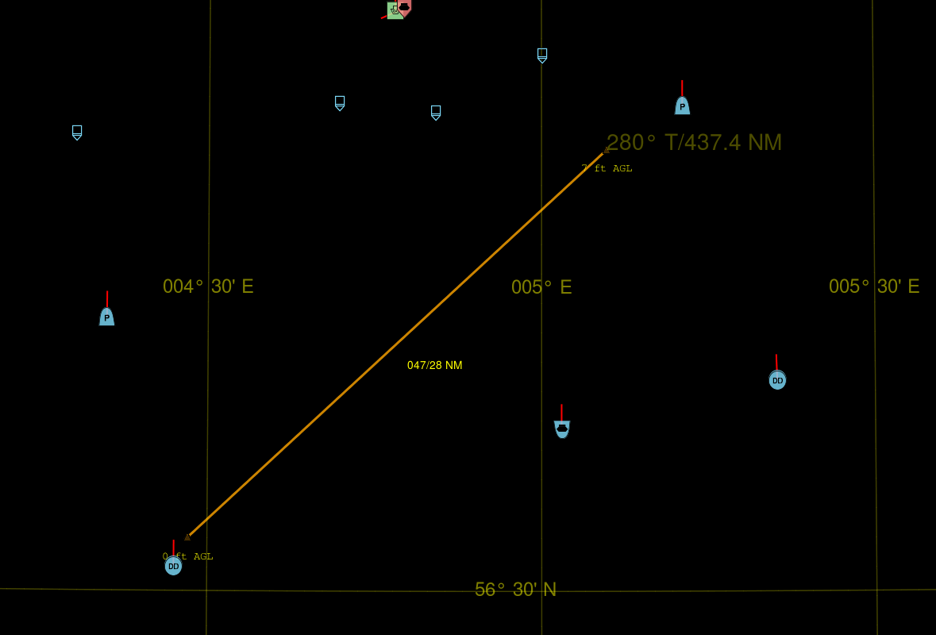

Tech Tip: Range Bearing Lines in VR-Forces 5.0.2

by Jim Kogler

We seldom add new features in a maintenance release, but on very rare occasions a simple one slips in. If you didn’t carefully read the release notes, you may have missed this.

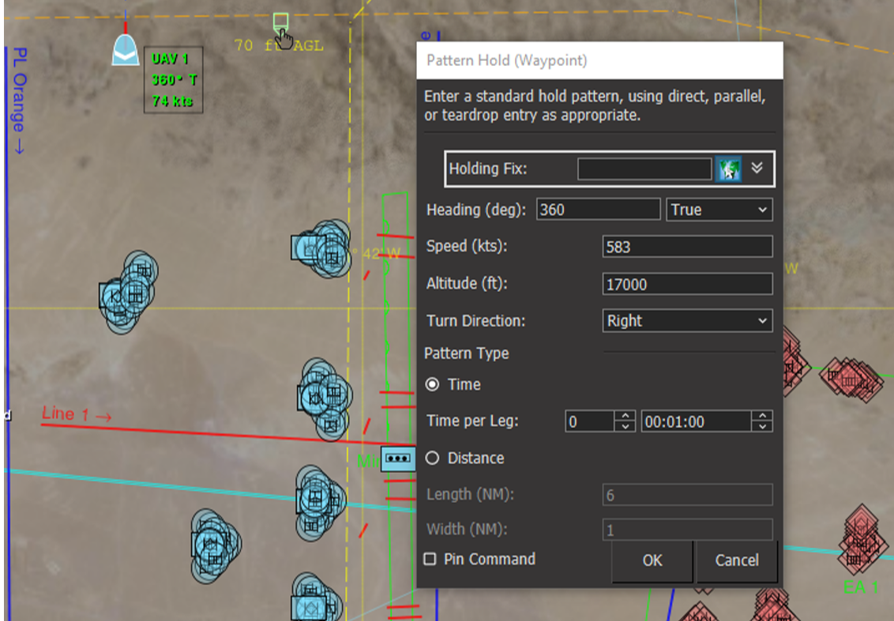

Tech Tip: Create Tactical Graphics When You Need Them

by Jim Kogler

Routes and waypoints are the bread and butter of VR-Forces scenario planning. Sometimes you end up in a situation where you task an entity to do something at a waypoint (or along a route) and you realize you forgot to create the waypoint – but the tasking dialog is already up and half-filled in. Here's a quick Tech Tip on how to create tactical graphics right when you need them.

HLA 1.3 and HLA IEEE 1516-2000 (DLC API) Support Ending for MAK ONE Applications

by Jim Kogler

Starting with the MAK ONE 2023 release cycle, MAK ONE applications will no longer support HLA 1.3 or IEEE 1516-2000 (DLC API). These changes will NOT impact MAK ONE Infrastructure products, including the MAK RTI, VR-Link, VR-Exchange, the MAK Data Logger, or the MAK WebLVC Server. Libraries for HLA 1.3 and 1516-2000 will continue to be available.

Multi-Resolution Modeling with MAK ONE

by Dan Brockway

Maybe you already know that VR-Forces can simulate at both the Entity-level and the Aggregate-level. But did you know that VR-Forces can simulate both models at the same time — in the same exercise?

SIW Pre-read: MAK’s February 2023 Simulation Standards Activity Update

by Matt Figueroa

MAK Technologies is known for its continuous work within the Modeling and Simulation community and as part of groups like SISO (Simulation Interoperability Standards Organization) to improve the standards and protocols that our customers and programs rely on. With SIW around the corner, we wanted to highlight some recent standards activity that MAK has been involved in...

The MAK ONE Release Versioning Convention: Keeping Track of the Changes

by Jim Kogler

Today I want to talk about MAK’s versioning conventions and the release process that help us and our customers keep track of changes and improvements. Specifically, patches. What is a patch? What causes MAK to release one? And finally, should customers use them?

The countdown to I/ITSEC 2022 is on!

MAK is excited and ready to showcase our MAK ONE suite of simulation software in-person at I/ITSEC 2022! When you stop by our booth (#1413), you’ll experience a whole-world synthetic environment for modeling, simulation and training in any domain as well as in multi-domain operations, including training solutions built with MAK ONE. Our team can't wait to connect with you face-to-face!

Pakistan Air Force Chooses MAK ONE

Cambridge, MA, October 21, 2022 - MAK Technologies (MAK), a company of ST Engineering North America, today announced that the MAK ONE suite of simulation software, including VR-Forces, has been selected for use in Pakistan Air Force’s (PAF) simulators for tactical training as part of its Composite Simulation Centre's Synthetic Battlefield Environment.

Brand New and Lasts Forever

I was at a conference a few years ago where I heard a program manager speak about "the next" simulation system that would take care of all the problems of the current simulation systems and save the future from having to ever do this again. I've heard this same speech dozens of times in my career.

Everyone wants a simulation system that is Brand New and Lasts Forever. (This should be the title of my next ebook.)

MAK Selected to Provide Common Simulation Software System for the LS Core 2.0 Program

Cambridge, MA, April 13, 2022 - MAK Technologies (MAK), a company of ST Engineering North America, today announced that the MAK ONE suite of products has been selected to provide the common simulation software system for the Australian Army’s Land Systems (LS) Core 2.0 Program.

MAKer Spotlight: Chris Gullette, Principal Engineer

We're excited to introduce you to Chris Gullette, a principal engineer at MAK who is our technical lead for the MAK FIRES Call for Fire training system. Chris is responsible for the architecture and design of MAK FIRES, and is instrumental in managing new features, undertaking the planning required to get them integrated into the product software, and then developing and implementing those new features. Recently, his role has carried him around the US to communicate with potential customers and solicit feedback to ensure that MAK FIRES meets the training needs of our users.

MAK Appoints Alicia Combs as Vice President of Training Solutions

We are excited to announce the appointment of Alicia Combs as Vice President of our new Training Solutions division, located in Orlando, Florida. In her new role, Alicia is responsible for managing MAK's entire portfolio of training solution programs, including determining strategic direction, overseeing resource coordination between programs, and managing projects and business development support.

Epic News: MAK Modeling & Simulation Testbed Receives Epic MegaGrant!

By Len Granowetter

We're excited to share that MAK has been given an Epic MegaGrant! MAK will leverage this support from Epic Games to help achieve greater correlation and interoperability between MAK's products and external applications that are built on Epic's Unreal Engine.

Although many of our customers build their synthetic environments entirely on the MAK ONE suite of products, it has always been our strategy to empower customers to combine MAK products with third-party engines and applications to create the most powerful and complex simulation systems.

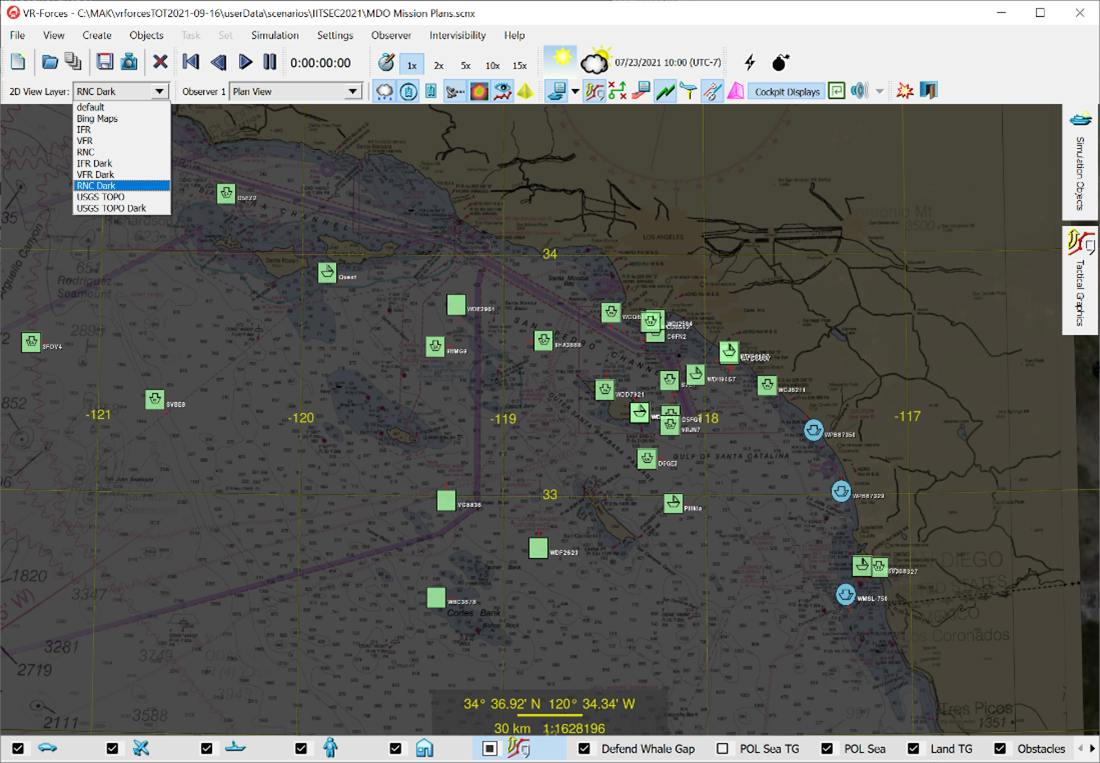

Tech Tip: Creating domain-specific 2D map views in VR-Forces

By controlling layers within your terrain definition, you can have 2D map views that are specific to each simulation domain. Here is an example scenario that contains saved views for three domains: Air, Land, and Sea.

One saved view uses a "2D Layer View" that specifies Nautical charts:

MAK ONE: Planet Earth’s “Digital Twin” for Training and Experimentation

The world is an infinitely complex place, and interactions between people, systems, and the environment are exceedingly difficult to model. Historically, simulation solutions have reduced these complexities to the bare minimum so that an environmental representation can be achieved within the limits of the current technology. This approach is certainly necessary. It enables us to develop focused tactics, techniques, and procedures training simulators, to assist "low-level units [to be] masters of their craft" as General McConville said at a presentation to the Association of the US Army (AUSA).

A New Thread: Aerodromes in MAK ONE

The MAK ONE Road to Release

Earlier this year, I shared the MAK ONE Tapestry story about how a mature product evolves at MAK. With each release, there are threads of capability that are wrapped up, others that continue on, and others still that are just beginning and will bring new benefits for releases to come.

I'd like to update you on where we are today.

Iteration Enables Quality

I have spent most of my career working to make better and better virtual worlds to create increasingly realistic immersive simulation environments. One concept I’ve learned, over and over again, is that Iteration Creates Quality.

Estimating the tactical impact of robot swarms using VR-Forces

This article was written in collaboration with Dr. Kevin Foster at the University of Alabama in Huntsville.

Militaries are developing swarms of autonomous robotic Unmanned Aircraft Systems (UAS) to conduct missions that are too hazardous for human crews or expensive robotic systems. Using MAK's VR-Forces CGF, researchers at the University of Alabama in Huntsville (UAH) are conducting experiments to determine if a simulated model of a robot swarm can improve military swarm tactics.

MAK Legion Update: Legion 1.1 and the SISO Study Group

By Matt Figueroa

A few months ago, Len Granowetter outlined our MAK Legion technology, which enables large-scale simulations that support thousands or even millions of high fidelity entities. I'm providing an update on where MAK Legion is today and a look at where we're taking it.

Tech Tip: Use this new command to easily create time-based scenarios

By Nathan Kidd

This month's tech tip is about an elegant and powerful feature in VR-Forces. The new plan language command, Do Until Interrupt, greatly simplifies the creation of entity plans and helps automate accurate tasking relative to your preferred "interrupt" conditions, such as the Simulation Time or Date, Time of Day, Scenario Event, Receive a Text Message, and more. By choosing a single reference point for multiple entities with the Do Until Interrupt feature, VR-Forces users can now build time-based scenarios more simply and intuitively. It also allows a user to easily specify an ending condition for VR-Forces tasks that are normally of indefinite duration, such as Civilian Wander.
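To make the idea concrete, here is a minimal, language-neutral sketch of the "do until interrupt" pattern in Python. It is not VR-Forces code and does not use the VR-Forces plan language or API: the `Entity` class, `wander_step`, `do_until_interrupt`, and the `dt`/`max_time` parameters are all hypothetical stand-ins. It shows one shared interrupt condition (here, simulation time) ending an otherwise open-ended task for several entities at once.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Entity:
    """Hypothetical stand-in for a simulated entity (not a VR-Forces type)."""
    name: str
    steps_taken: int = 0

    def wander_step(self) -> None:
        # One tick of an open-ended task, loosely analogous to "Civilian Wander".
        self.steps_taken += 1


def do_until_interrupt(entities: List[Entity],
                       task: Callable[[Entity], None],
                       interrupt: Callable[[float], bool],
                       dt: float = 1.0,
                       max_time: float = 3600.0) -> float:
    """Repeat `task` for every entity each tick until `interrupt(sim_time)` is true.

    All entities share the same interrupt condition (a single reference point).
    `max_time` is a safety cap so the sketch always terminates.
    Returns the simulation time at which the loop stopped.
    """
    sim_time = 0.0
    while sim_time < max_time and not interrupt(sim_time):
        for entity in entities:
            task(entity)
        sim_time += dt
    return sim_time


# Usage: two civilians wander until simulation time reaches 10 seconds.
civilians = [Entity("civ1"), Entity("civ2")]
stopped_at = do_until_interrupt(civilians, Entity.wander_step, lambda t: t >= 10.0)
```

The interrupt is passed as a predicate rather than hard-coded so that any condition (a time of day, a scenario event flag, a received message) could be swapped in without changing the task itself, which mirrors the decoupling the tech tip describes.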

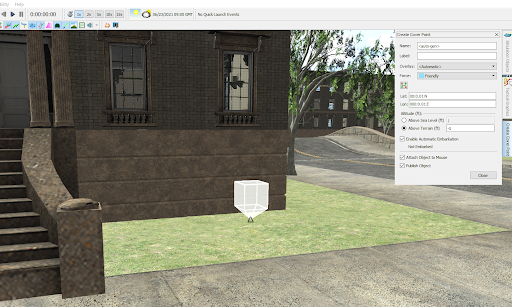
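Here is a minimal Python sketch of the "do until interrupt" pattern. Open-ended tasks keep stepping until any one of several interrupt conditions becomes true, and several entities share the same condition as a common reference point. This is not the VR-Forces plan language or its API. `SimState`, `Wanderer`, `at_sim_time`, `on_text_message`, and `do_until_interrupt` are hypothetical names used only for illustration, and the fixed time step is an assumption.

```python
# Hypothetical sketch of the "do until interrupt" idea; not the VR-Forces API.
import random
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class SimState:
    """Hypothetical shared simulation state (not a VR-Forces type)."""
    sim_time: float = 0.0                      # seconds since scenario start
    messages: List[str] = field(default_factory=list)


# An interrupt is just a predicate over the shared state.
Interrupt = Callable[[SimState], bool]


def at_sim_time(t: float) -> Interrupt:
    """Fires once simulation time reaches t."""
    return lambda s: s.sim_time >= t


def on_text_message(text: str) -> Interrupt:
    """Fires once the given text message has been received."""
    return lambda s: text in s.messages


@dataclass
class Wanderer:
    """Stand-in for an open-ended task such as Civilian Wander."""
    name: str
    x: float = 0.0
    y: float = 0.0
    steps: int = 0
    rng: random.Random = field(default_factory=lambda: random.Random(0))

    def step(self, dt: float) -> None:
        # Random jitter of up to 1 m per axis per second; never ends on its own.
        self.x += self.rng.uniform(-1.0, 1.0) * dt
        self.y += self.rng.uniform(-1.0, 1.0) * dt
        self.steps += 1


def do_until_interrupt(state, entities, interrupts, dt=1.0, max_steps=100_000):
    """Step every entity's task until any interrupt fires; return the stop time."""
    for _ in range(max_steps):
        if any(fired(state) for fired in interrupts):
            return state.sim_time
        for entity in entities:
            entity.step(dt)
        state.sim_time += dt
    raise RuntimeError("no interrupt fired within max_steps")


# Usage: two civilians wander until 30 s of simulation time have elapsed
# or a "disperse" text message arrives, whichever comes first.
state = SimState()
crowd = [Wanderer("civ1"), Wanderer("civ2")]
stop_time = do_until_interrupt(
    state, crowd, [at_sim_time(30.0), on_text_message("disperse")])
```

Because interrupts are plain predicates, adding another condition (a scenario event, say) is just one more function in the list, and the first condition to fire ends every task that shares it.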

Tech Tip: Configuring VR-Forces path planning to support cover points

In VR-Forces, soldiers can take cover from enemies behind obstacles such as buildings, trees, rocks, or anything else in the terrain large enough to shield them. When they do, it's important that they react and move immediately so they can quickly reach safety. To speed up the evaluation of many possible cover locations at run-time, cover points are pre-generated as part of the VR-Forces Navigation Data generation process. By default, all Navigation Data provided with MAK terrains already contains cover points for lifeforms (primarily humans), but not for vehicles. You can configure cover point generation in the navigationProfiles.mtl configuration file, found in appData\settings\vrfSim.
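The benefit of pre-generating cover points can be sketched in Python. The expensive part, placing candidate points around obstacles, happens once and offline, so the run-time query only has to filter a short list. This is an illustrative sketch, not VR-Forces' actual algorithm or the navigationProfiles.mtl format. The circular obstacles, the `clearance` parameter, the sampling count, and all function names are hypothetical, and "hidden" here only means that the enemy's straight line of sight to the point crosses an obstacle.

```python
# Hypothetical sketch of offline cover-point generation plus a run-time query;
# not VR-Forces' algorithm or the navigationProfiles.mtl format.
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[float, float]


@dataclass
class Obstacle:
    """A circular obstacle such as a rock, tree, or building footprint."""
    center: Point
    radius: float


def generate_cover_points(obstacles: List[Obstacle], clearance: float,
                          samples: int = 8) -> List[Point]:
    """Offline step: ring each obstacle with candidate cover points.

    `clearance` is a hypothetical per-profile setting that keeps each point
    just off the obstacle's surface.
    """
    points = []
    for obs in obstacles:
        r = obs.radius + clearance
        for k in range(samples):
            a = 2 * math.pi * k / samples
            points.append((obs.center[0] + r * math.cos(a),
                           obs.center[1] + r * math.sin(a)))
    return points


def segment_hits_circle(p: Point, q: Point, obs: Obstacle) -> bool:
    """True if segment p->q passes within obs.radius of the obstacle center."""
    (px, py), (qx, qy), (cx, cy) = p, q, obs.center
    dx, dy = qx - px, qy - py
    length_sq = dx * dx + dy * dy
    t = 0.0 if length_sq == 0 else ((cx - px) * dx + (cy - py) * dy) / length_sq
    t = max(0.0, min(1.0, t))  # clamp to the segment
    nx, ny = px + t * dx, py + t * dy
    return math.hypot(cx - nx, cy - ny) < obs.radius


def best_cover(soldier: Point, enemy: Point, cover_points: List[Point],
               obstacles: List[Obstacle]) -> Optional[Point]:
    """Run-time step: nearest pre-generated point hidden from the enemy."""
    hidden = [c for c in cover_points
              if any(segment_hits_circle(enemy, c, o) for o in obstacles)]
    if not hidden:
        return None
    return min(hidden, key=lambda c: math.dist(soldier, c))


# Usage: one rock between a soldier at the origin and an enemy 30 m east.
rocks = [Obstacle((10.0, 0.0), 2.0)]
cover = generate_cover_points(rocks, clearance=0.5)        # once, offline
spot = best_cover((0.0, 0.0), (30.0, 0.0), cover, rocks)   # at run-time
```

A real planner would also need to confirm that a path to the chosen point exists; this sketch only ranks hidden points by straight-line distance from the soldier.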

MAK Down Under!

From Infantry Fighting Vehicle Simulators to Reconfigurable Driver Simulators, here's a peek at how MAK is supporting our Australian partners and customers.

MAK is proud to serve customers and partners worldwide. This month, we're zooming in on Australia to highlight some of our favorite recent collaborations, particularly our partnership with Applied Virtual Simulation (AVS) to display its Infantry Fighting Vehicle Simulator at the recent Land Forces Conference, and our work with Universal Motion Simulation (UMS) to develop the Reconfigurable Driver Simulator (RDS).

MAK Earth presents: the Guam Terrain

MAK Earth now includes higher-resolution data for the island of Guam, its surrounding waters (bathymetry), and nearby islands. If you're simulating operations in the Western Pacific Rim and need to stage assets on US territory, Guam ("Where America's Day Begins") could be an ideal location for your scenarios. Take a look at this virtual tour video of Guam.

Tech Tip: Configuring specular reflections to add realism

For this month's tech tip, we'll cover how to configure your environment to add specular reflections to inland water, making scenarios richer and more realistic. Specular effects produce the shiny, reflective water surface you're used to seeing in the real world. Because inland water in a terrain is usually just a flat elevation area textured with an image of water, adding specular reflections increases realism and provides an important visual cue for aircraft.

MAK FIRES: Individual training in the context of a multi-domain operation

For more than 30 years, MAK has been providing commercial software products used by customers, just like you, to create simulated environments for concept development, research, design, and training. Recently, MAK expanded its staff to include experts in the design, development, and implementation of training solutions that train individual skills in a collective training environment. MAK's mission has always been to be a partner, not just a vendor. Our Training Solution Division expands the range of solutions we can offer to minimize your risk and program cost and help you achieve program success.

Our first Training Solution offering is MAK FIRES, a comprehensive and immersive training system that provides realistic training for Call for Fire (CFF) missions. MAK's own Bill Kamer, a retired Bradley Master Gunner, was the inspiration behind MAK FIRES. Immediately upon joining the MAK team, Bill saw the potential of using the MAK ONE environment to create an easy-to-use, realistic CFF trainer that can be used for individual training at home station or as part of a formal CFF classroom environment.

Applied Virtual Simulation (AVS) joins the MAK family as an official distributor!

MAK is thrilled to add AVS to our worldwide team of distributors to support our Australian MAK users! We're proud to have a team of partners who have deep knowledge of our software, speak your language, and can help you build the solution you want. No matter where you are in the world, you're never far from MAK.

Here's a bit about AVS...

Applied Virtual Simulation (AVS) is a leading provider of simulation-based training systems to the Australian Defence Force (ADF).

Tech Tip: Starting MAK ONE Applications on Windows 10

Here's a helpful tip for transitioning MAK ONE applications from Windows 7 to Windows 10. We discovered that the Windows 10 "All apps" menu does not support the folder structure we have been using to organize startup shortcuts for our applications, documentation, and tools. Everything gets dumped into a flat list under All apps > MAK Technologies. This makes finding the application you want to run tedious at best and confusing at worst, particularly if you have multiple versions of an application installed.

Fortunately, there is a fairly simple way to work around this problem. You can create a toolbar that points to the MAK shortcuts folder.

To do this: